Recovering Lost Texts: Rebuilding Lost Manuscripts

By

Julia Craig-McFeely

September 2018

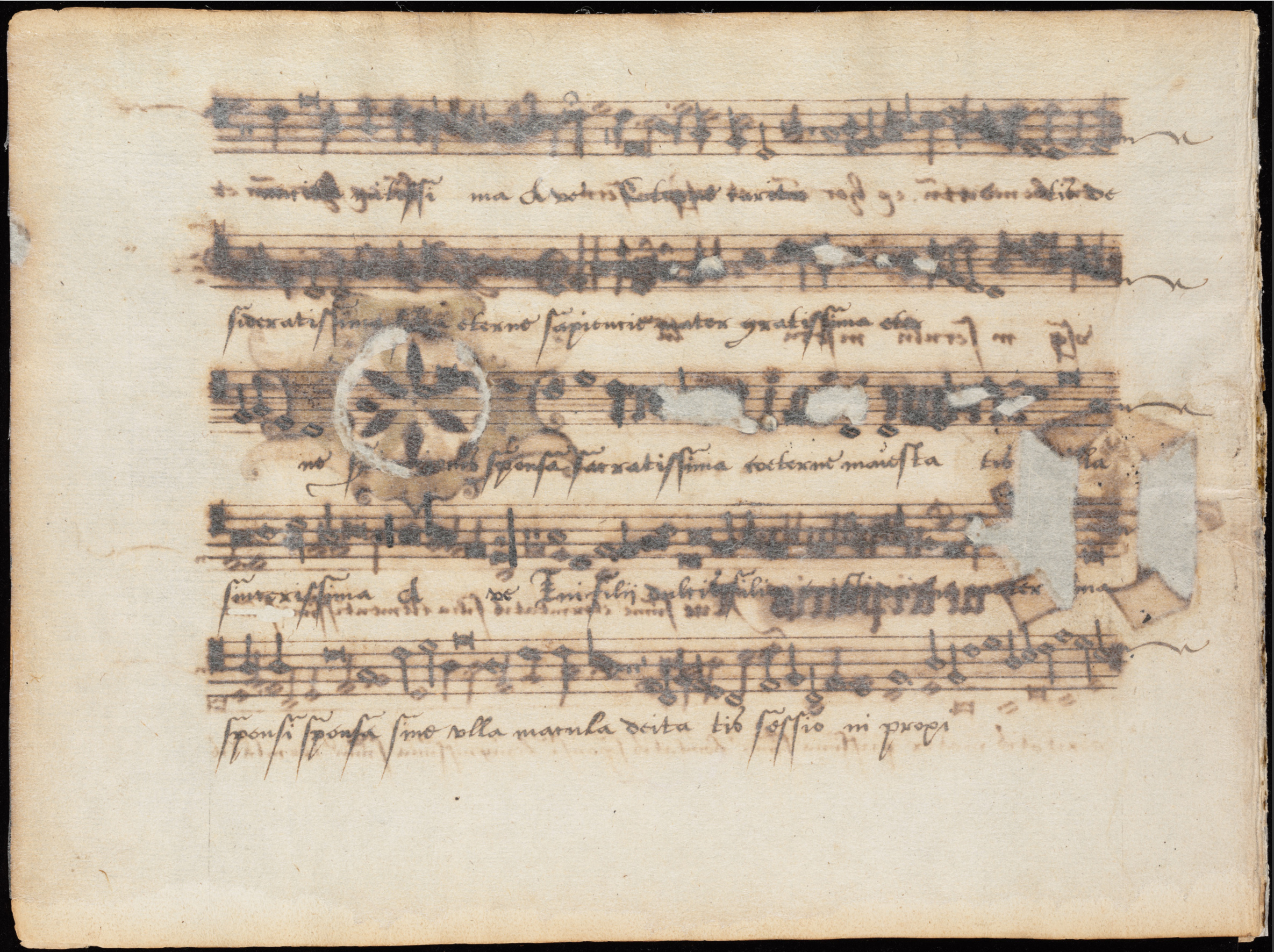

Acid burn-through is a problem particularly prevalent in the earliest paper sources, where acidity and poor paper quality coupled with thick, acidic ink recipes (where frequently the liquid part of the recipe came from red wine and/or vinegar) have led to serious deterioration, particularly in the last 150 years.1

It is, however, also to be found in parchment manuscripts throughout the medieval period where iron gall inks were used.2

The result today is a number of manuscripts that are unreadable and often also withdrawn from public access because the acid damage has rendered them brittle and too delicate to handle.3 The only way to stabilize a leaf after de-acidification is often to overlay it with gauze or Japanese tissue which, although supporting the physical structure, makes the text even more difficult to read.4 Having these manuscripts digitized is an obvious way of preserving them from handling, though priority lists, pre-photography conservation, and digitization costs often mean that these manuscripts languish too long, sometimes deteriorating further, despite the urgency of a record of them being made while it is still possible to read something.

This article presents an overview of a recent digital repair project undertaken by a team at the University of Oxford as part of the Tudor Partbooks project.5 The project provided valuable lessons in both methodology and scalability. These affected not only the final appearance of the images but also ethical decisions about the manner and extent of intervention in the damage. They may inform decisions about similar projects, and perhaps give food for thought to scholars who work with documents rendered difficult by this type of damage.

Background

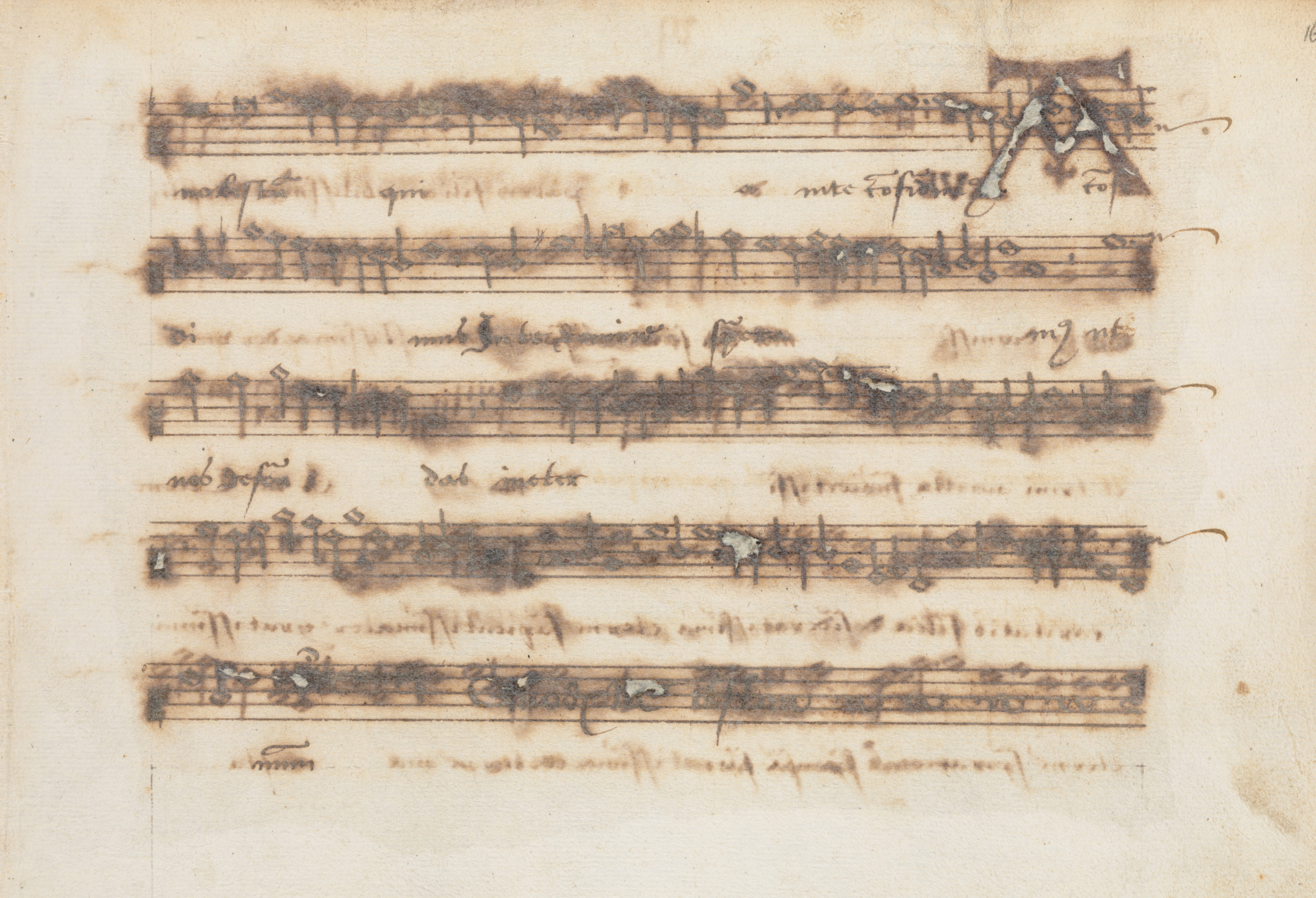

In November 2003, I digitized a large late-medieval paper-and-parchment music manuscript (MS) in Bologna, at the Museo Internazionale e Biblioteca della Musica di Bologna.6 MS Q. 15 (hereinafter Q15) was completed in the mid 1430s. The destination for the images was a facsimile published with an introductory study by Margaret Bent that had been several decades in the making.7 The images were reproduced as shot (apart from trimming away unwanted background around the page edge) with no attempt to brighten or clean dirty pages, and matching the color as accurately as possible to that of the original manuscript.8 Although the manuscript was in excellent condition overall, a few of the paper leaves had suffered significant burn-through due to the acidity of the ink compounded by the acidity of the paper, and they were unreadable. For the introductory study I undertook a “restoration” of these eight images, facilitating the transcription of the music they preserved for the first time since the manuscript had been written.9

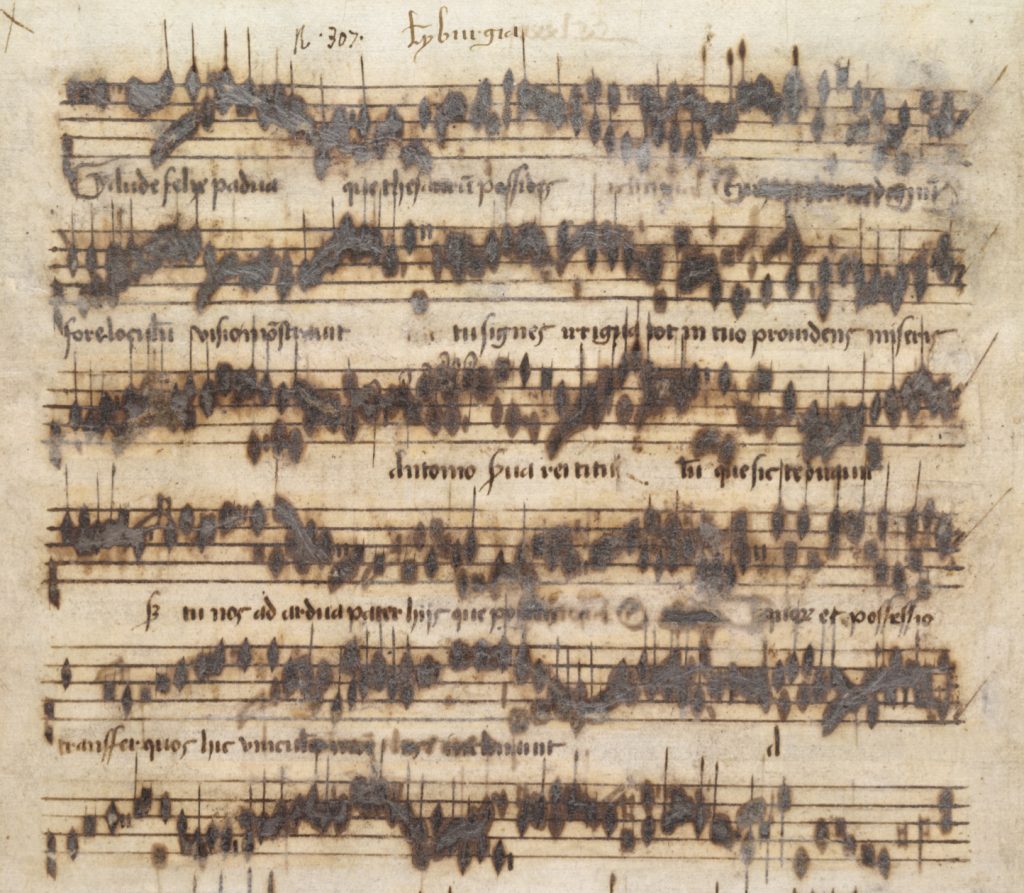

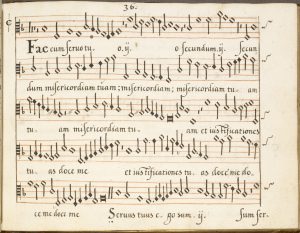

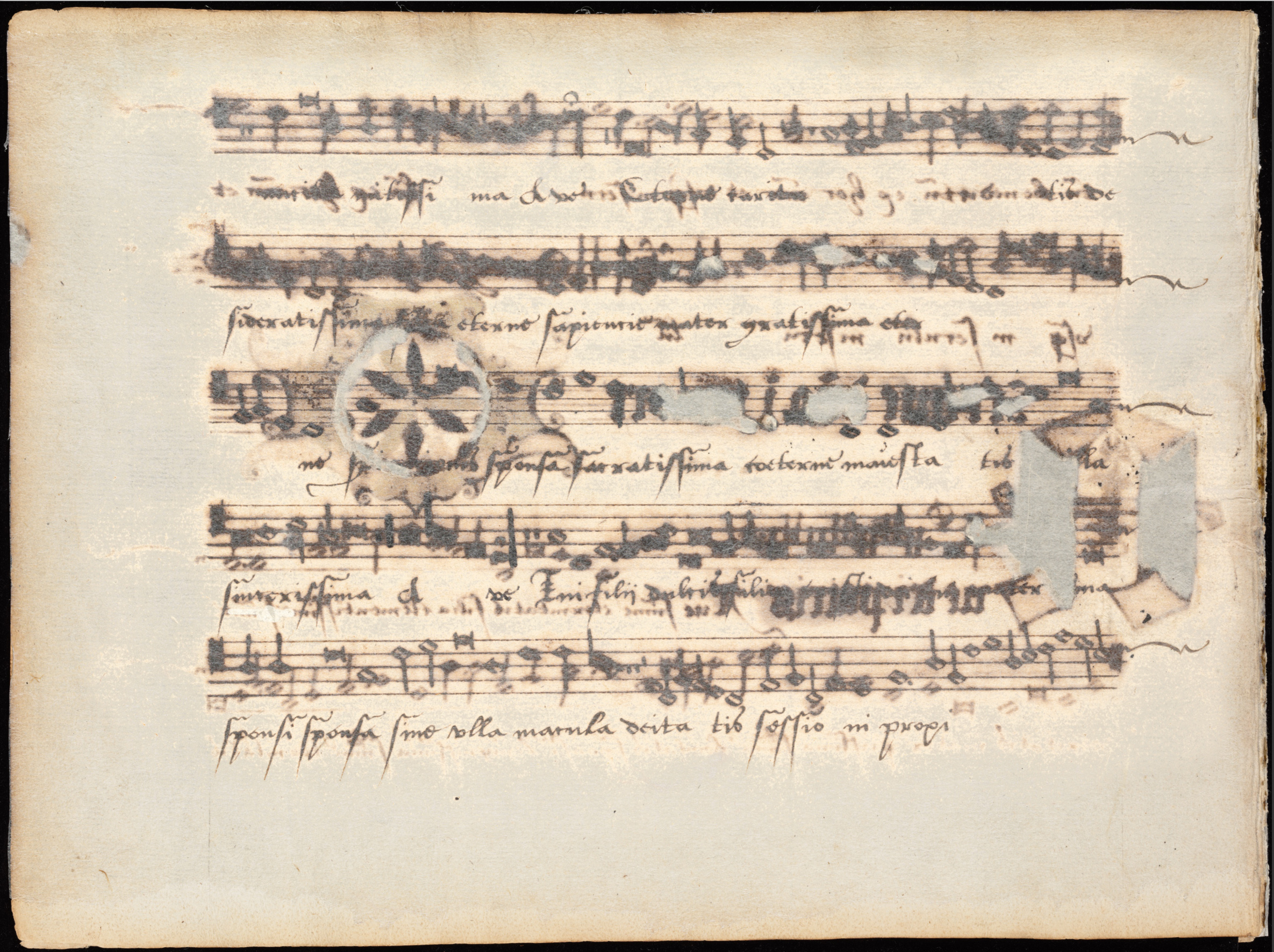

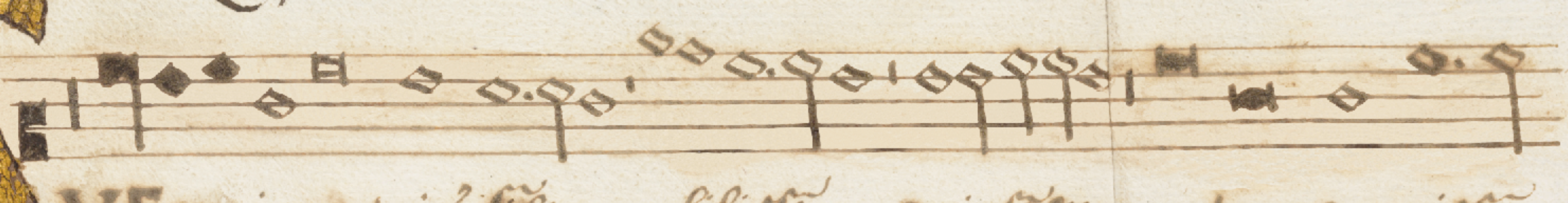

Figure 1. Bologna Q. 15, top part of f. 310v. Used with permission of Bologna, Museo Internazionale e Biblioteca della Musica.

The work on Q15 prepared the ground for the Tudor Partbooks project, in which the set of five manuscripts in the Bodleian Library known collectively as the “Sadler partbooks”10—which had suffered similar damage—were repaired in the digital medium between 2014 and late 2017. The Sadler books presented a degree of reading difficulty similar to that of the damaged leaves of Q15, but a dramatic difference of scale, since instead of just eight there were 700 page images that would need work to make them fully legible.

My goal in the case of the Q15 work had been solely to make the images clear enough for one expert reader to transcribe from them. We did not at first expect to reproduce the images for public scrutiny (the unedited images of the damaged pages were part of the facsimile), but the decision was taken to reproduce them in monochrome as an appendix to the introduction since they provided the evidence to support the newly transcribed compositions.11 The style of work therefore did not consider whether the result was aesthetically pleasing: it focused on eliminating the burn-through rather than restoring the page to visual parity with the rest of the MS. I wasn’t concerned either about what this would be called, though we had some discussion about the defensibility of the intervention necessary (that I cover elsewhere).12 “Restoration” seemed a reasonable term to use, since with the extremely high-resolution images I was able to zoom in to see very fine detail in the image and determine,13 by comparing the fuzziness and relative color of the ink, which of the notes (in most cases) showed through from the back. I was also helped by note stems, which generally didn’t burn through, so stemmed notes were easy to identify, while the scribe’s consistency in drawing stem lengths often provided a clue to the pitch of the note to which the stem was attached.

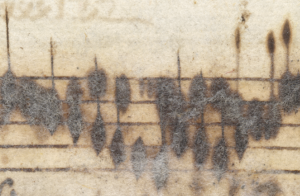

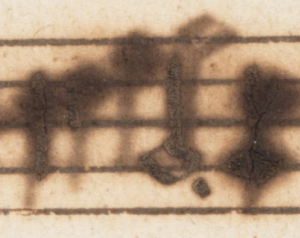

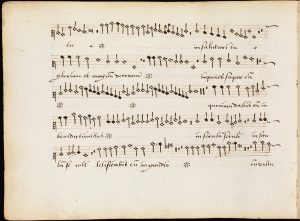

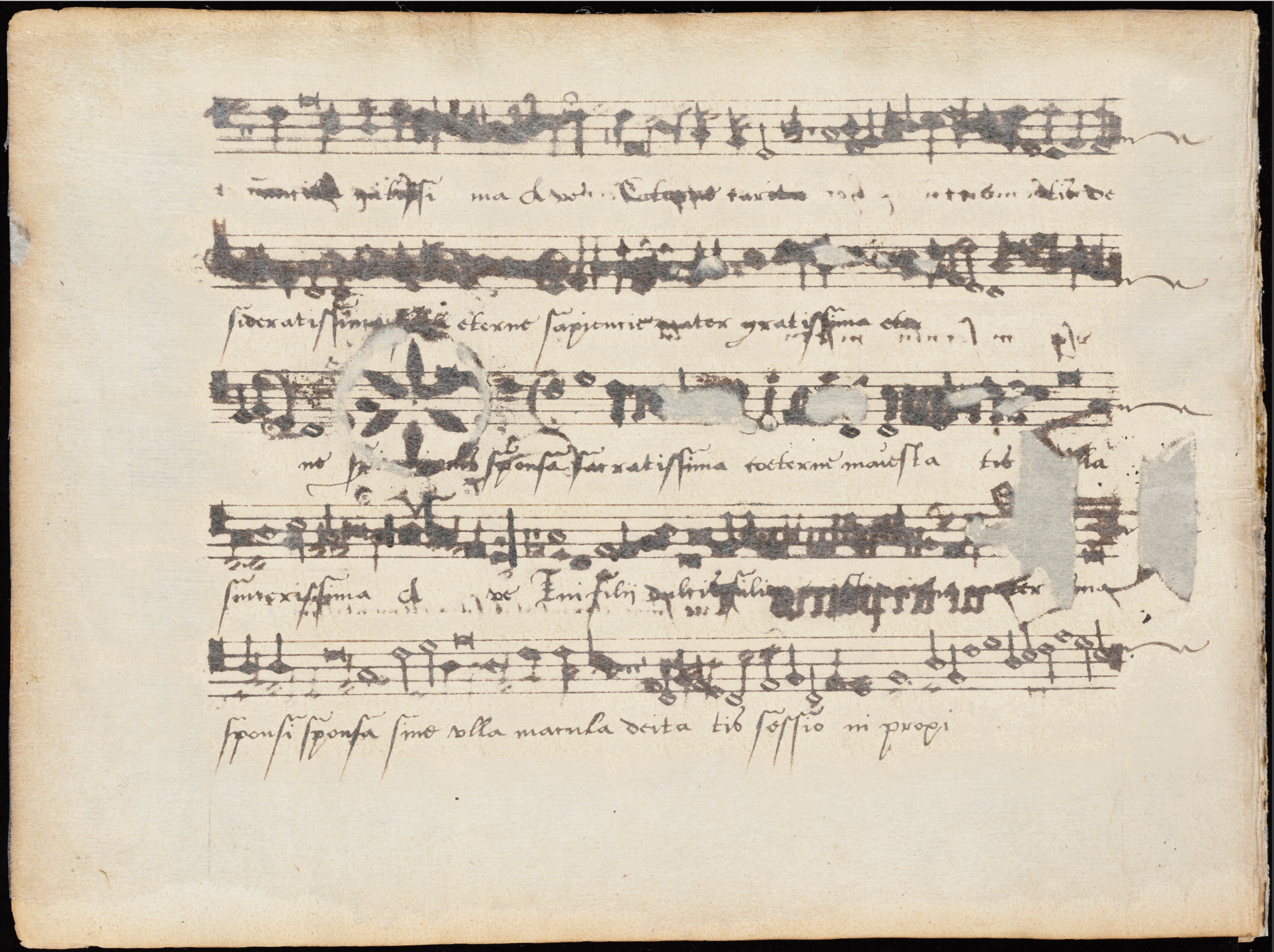

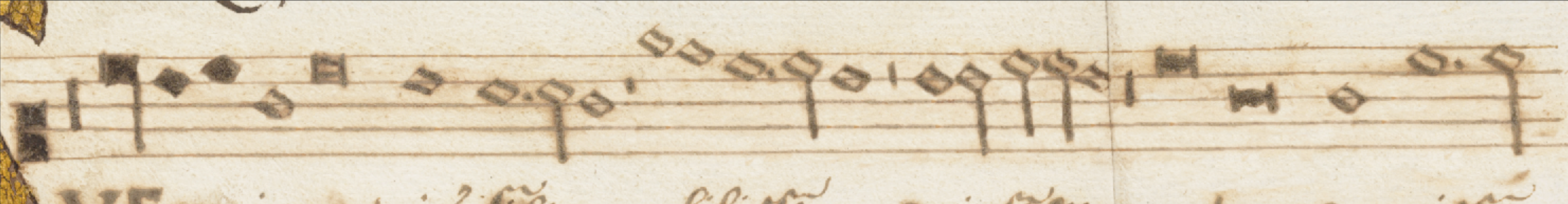

Figure 2. Bologna Q. 15, enlarged detail of f. 310v. Used with permission of Bologna, Museo Internazionale e Biblioteca della Musica.

Apparent in this detail is the Japanese tissue overlay, which made it more difficult to differentiate between colors and edges under the repairs. Once I had eliminated the obvious interference from the reverse of the page, the remaining problems were much easier to resolve—either from context (the musical line was unlikely to make sudden leaps out of range, for example) or from spacing (which was reasonably regular in this hand), so I did not find myself having to create something from nothing at any point. I simply “tidied up” what became visible on close inspection.

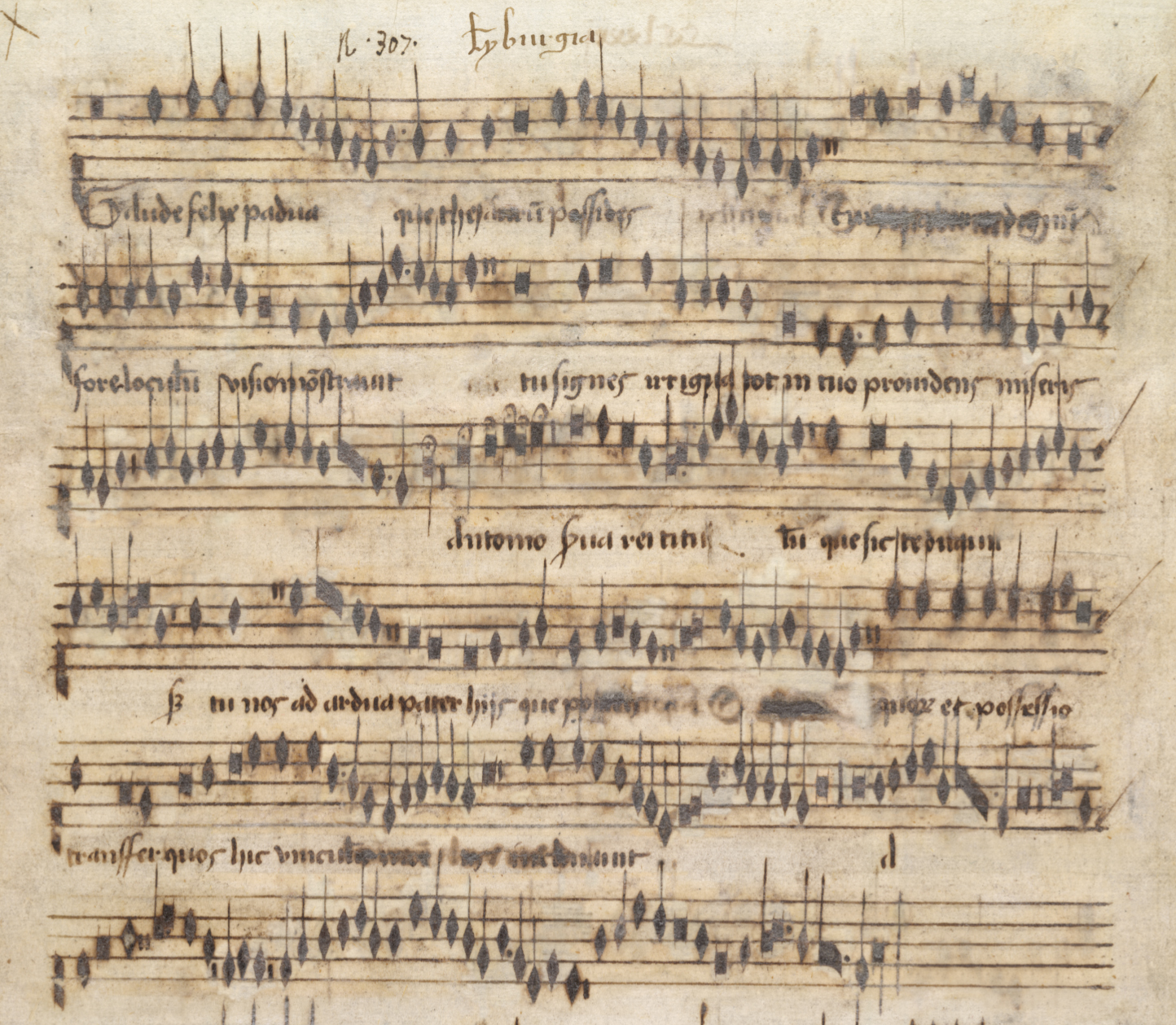

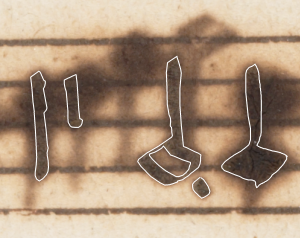

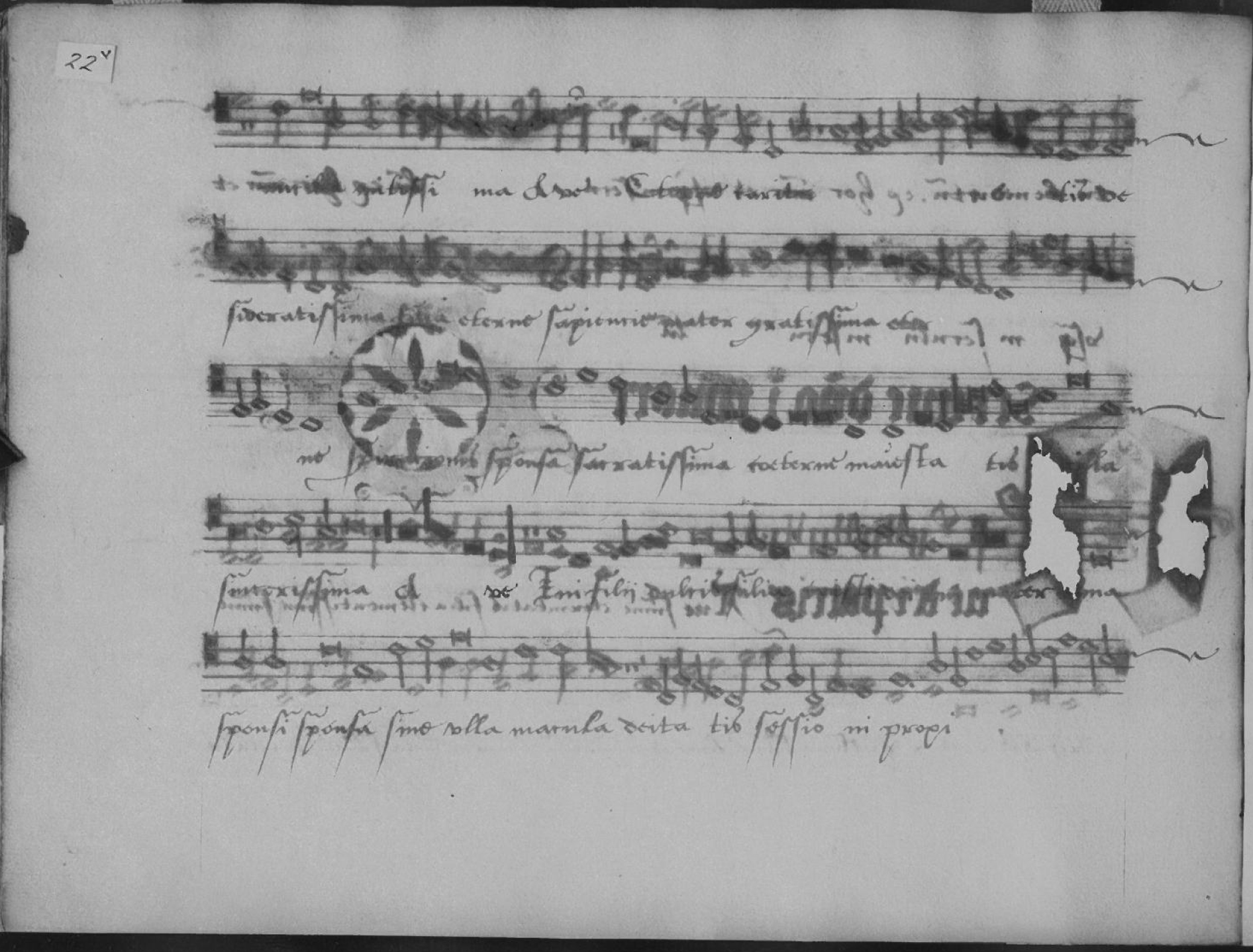

The restored sample (below) is, frankly, fairly ugly, but there is no question that it is readable; I did not need to improve the text underlay, since there was enough visible for the editor to establish what the words should be without my intervention. It does not, however, represent any iteration of the manuscript in reality, from its inception to today, nor does it represent how the manuscript might have looked had it not suffered the depredations of acid ink. The image editing allowed the lost works recorded in the damaged leaves to be recovered and an edition to be included in the introductory study to the facsimile. If I had the job to do over, and given enough time, I would take more trouble to clean around each note and smooth out the background to eliminate the visible shifts in color caused by the cloning process that was used to hide the show-through.14 I would also be more careful to align and match the color and width of the cloned parts of stave lines with uncloned sections (e.g., the lowest line of the second stave).

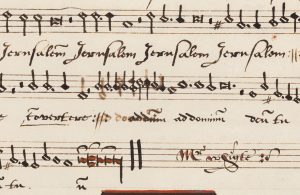

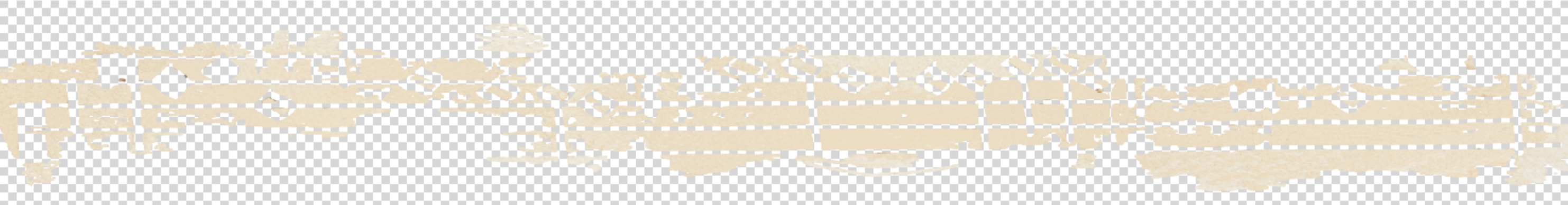

Figure 3. Bologna Q. 15, part of f. 310v digitally restored. Used with permission of Bologna, Museo Internazionale e Biblioteca della Musica.

High-resolution imaging is an extremely useful tool since the facility to zoom in (enlarging well beyond what can be seen with the naked eye), coupled with the ease of handling when using images as opposed to the original source, allows readings that are almost impossible in the analog world. Color can be tweaked to emphasize differences in ink, but even so, reading images in a state like that of the MS in fig. 1 is a laborious process, and presenting the evidence of your findings to your peers is almost impossible in print. There is no possibility of an unedited image being used for performance. Increasingly, musicians interested in this type of repertory want to perform directly from the source, since there are aspects of the notation that are lost or obfuscated in modern editions.15 The digitally restored images were in this case an ideal way to offer other readers and performers the information they needed, particularly when presented alongside the originals, as they were in the Q15 facsimile.

The Problem

The work on Q15 served to demonstrate that a great deal could be achieved to eliminate—or “repair”—the damage caused by heavy show-through and burn-through using high-resolution digital images. Scaling up to the much more extensive Sadler project (700 images in Sadler as opposed to eight in Q15), however, led to changes in methodology and approach that are informing follow-on project design.16

The repertory of Tudor music surviving in partbooks is considerable, but relatively few of the surviving partbook sets are complete.17 The introduction of partbooks as a medium for notating and performing music resulted from the shift from the medieval choirbook (usually extremely large in format, with the voice parts distributed over one or more openings of the book), to the more intimate late medieval/early modern partbook in which individual voice parts were each copied into their own book. A set of partbooks might thus comprise four to six (and, as time moved on, up to ten) individual books, each containing a different voice part. Sometimes one or more of those books has been lost,18 rendering the remaining books almost useless since the music lacks an entire voice part and is thus unperformable.19

To judge by the number of concordances between sources it seems little of the repertory is completely lost. Even missing partbooks can be covered, since a missing altus part in one set may be supplied from another containing the same work but that might be lacking the tenor. Even so, the loss of one of the very few surviving complete sets of partbooks to age-related damage has a very significant impact on access to the repertory of this period, and denies us a crucial witness to the domestic provincial musical activity of the period, when almost all of the surviving witnesses come from urban centers or institutions.

John Sadler, a schoolmaster from Northamptonshire, is credited with the copying of the five Sadler partbooks, Oxford, Bodleian Library Mus.e.1–5, between about 1565 and 1585.20 After passing through the hands of various musicians and collectors, surviving a warehouse fire and other misadventures, they ended up in the Bodleian Library.21 Despite the excellent husbandry of the library, nothing could compensate for the poor materials originally used: by the early twentieth century, the acidity of both ink and paper had rendered the books largely unreadable, just at a time when musicology was rediscovering the music of the Tudors. They were withdrawn from access by the 1960s.22 They disintegrated further until de-acidification in the late 1970s and stabilization with Japanese tissue,23 which unfortunately made some of the most damaged pages even more difficult to read.

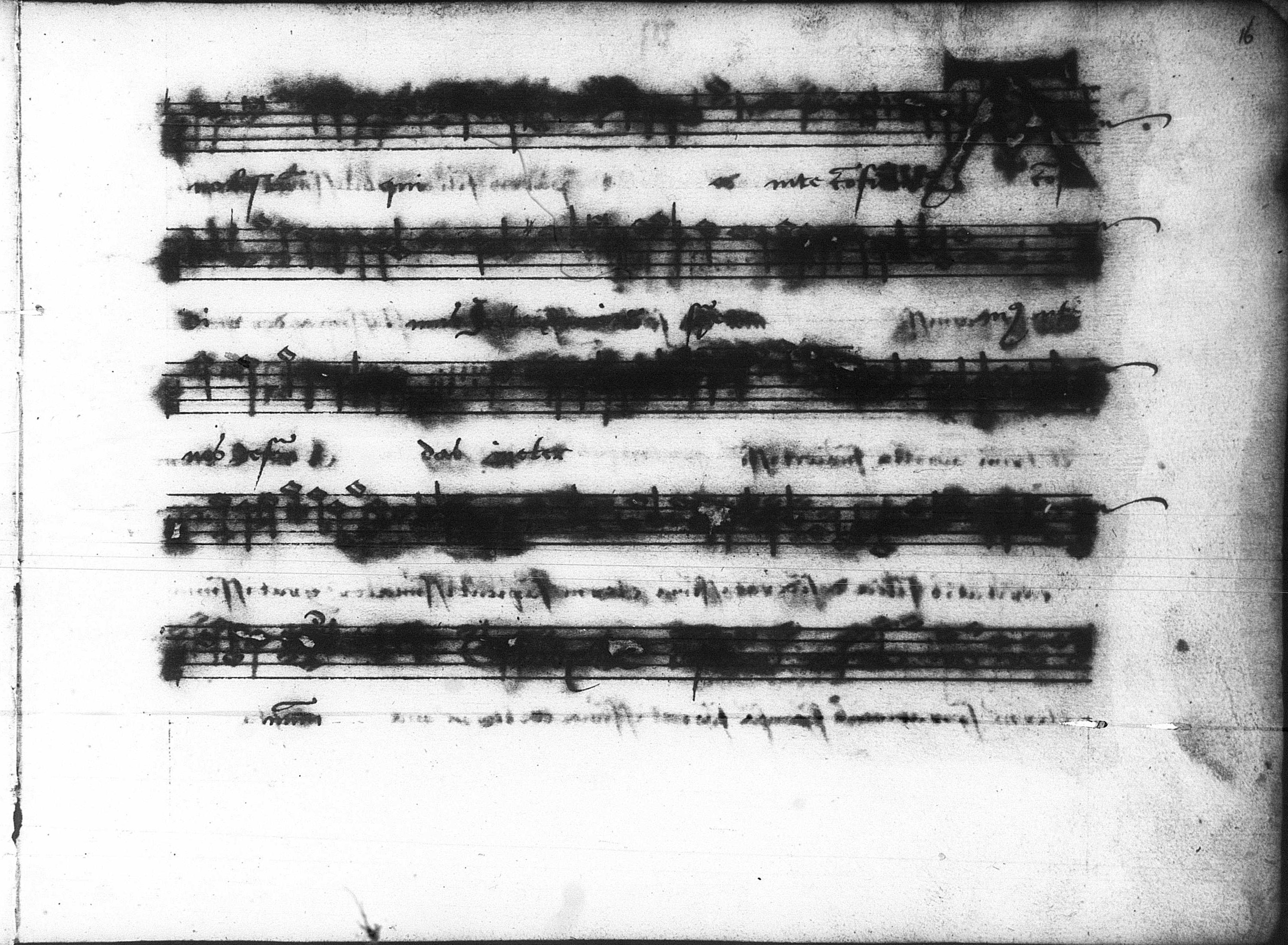

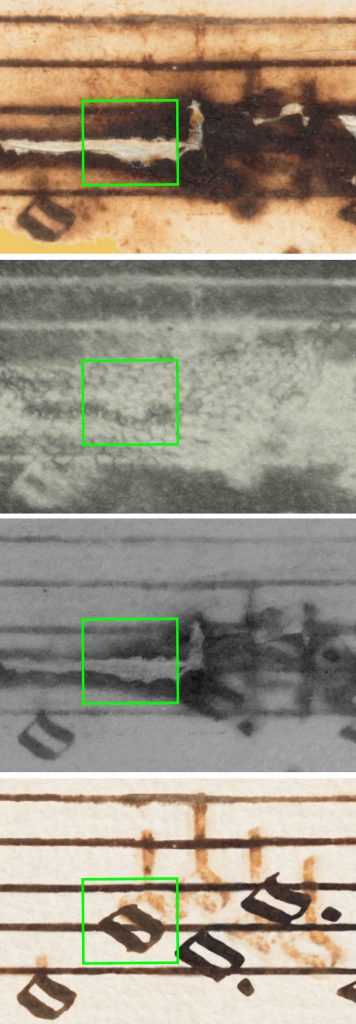

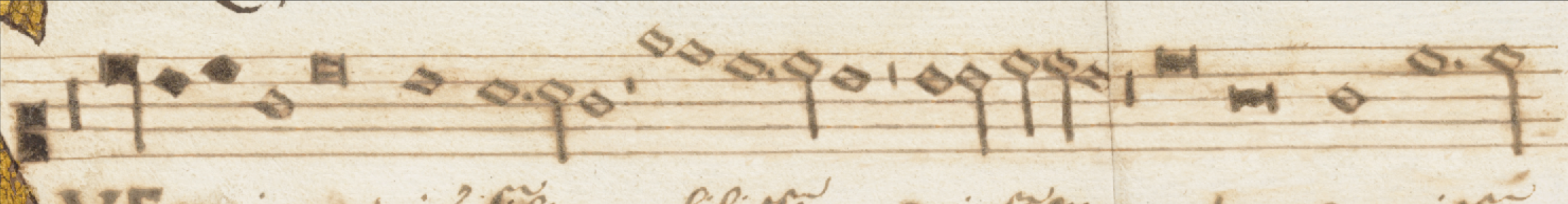

The conservation work halted the disintegration of the leaves, and left them disbound in wallets, allowing them to be microfilmed (but only in monochrome) so that scholars had access to some record of the books. The microfilm has therefore been the only point of access to the manuscripts for over forty years but, as fig. 4 shows, it is far from useful.

The advent of digitization in color and at extremely high resolution created the opportunity to rescue these books from the obscurity into which they had fallen. The contents are not entirely unique, but they do represent music of singular importance: much of it is of a high quality, and its loss has a significant impact on our understanding of the repertory and activity of provincial musicians in the sixteenth century.

The Tudor Partbooks project made use of the Digital Image Archive of Medieval Music (DIAMM) image-delivery mechanism, my own experience in the digital restoration of damaged manuscripts (up to this time mostly on medieval sources of music), and the technical expertise of a group of musicologists to embark on an unusual task, the reconstruction of two important sets of partbooks: Oxford, Christ Church Mus. 979–83 (the Baldwin partbooks), which lack the tenor book (being reconstructed by the musicology team led from the University of Newcastle), and the Sadler partbooks, presently unreadable (being reconstructed by the digital restoration team based in Oxford).24 This paper is concerned with the digital reconstruction of the Sadler books.

The intended destination for both reconstructions is a color print reproduction of each partbook set, with zoomable high-resolution images (both restored and unrestored) available online through DIAMM, which are in progress. The print outputs, also in progress, are aimed at both academic users and musicians who would perform or transcribe directly from the books, but there is also a significant nonmusicology market that makes paper publication financially feasible.25 The commitment to a print output, and the production cost of a short-run in color, means that the end result is going to have a much wider readership, with much more varied expectations and needs than the Q15 images, which were for the use of a single scholar. Therefore, the result needs to look reasonably pleasing, not simply legible, and ideally we want the style of the Sadler reproduction to represent the books in a form in which they might have appeared at some time in their life (before the acid degradation took hold), or how they might appear today had the materials used in their creation been less destructive.

The Bodleian Library was able to digitize the leaves at the high resolution required for restoration work, and a brief glance at the color version of the same page shown in the microfilm in fig. 4 demonstrates the vast difference in the quality of information provided by the two reproduction methods.

With the complete images finally in our hands, we realized the task was far larger than we had anticipated: of the 774 page images, at least 700 would require some intervention to be usable for performance, and at least 600 of those would require major work, ranging from six to twelve hours per image using the methods I had piloted with Q15. Although we had some idea of the level of damage before we started, there were significant differences between the Q15 work and this, most of which became manifest only as we embarked on the work:

With effort, most of the notes could be deciphered, but we needed to do more than make them decipherable since these books should be usable for performers, not simply transcription.

The state of the manuscripts was worse than Q15: a few pages had burned through to the extent that there was lacing and holes—some very large—where decoration had burned right through the paper.

The intended final output for the Sadler books was a color reproduction of the five books in readable form and in their entirety.

The problems of readability (and thus restorability) were compounded by tissue overlay on the worst pages: on un-tissued pages it was usually possible to decipher the difference between surface writing and show-through by looking for crystalline deposits on the surface ink, but tissue overlay hides any crystals and almost completely cancels out the difference in color and fuzziness between show-through and surface writing, visible in fig. 6 below.
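The scale implied by these figures follows from simple arithmetic. A back-of-envelope sketch in Python, using only the numbers quoted above (the variable names are illustrative, and the totals are the bounds implied by the stated per-image range, not a project record):

```python
# Figures quoted in the text; everything derived from them is an estimate.
major_images = 600             # "at least 600" images needing major work
hours_low, hours_high = 6, 12  # stated range of hours per image


def total_hours(images, hours_per_image):
    """Total editing hours for a given number of images."""
    return images * hours_per_image


low = total_hours(major_images, hours_low)
high = total_hours(major_images, hours_high)
print(f"Estimated effort: {low} to {high} hours")
```

At an assumed forty hours a week, the lower bound alone amounts to ninety working weeks for a single editor.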

We first realized the potential for volunteer restorers to increase our output after a brief talk to a group of amateur singers on a facsimile-singing day. They were fascinated by what we were trying to do, and several of them immediately offered to help. The volunteers who came forward included prospective end users (musicologists, viol and recorder players, singers, amateur and professional musicians of every type) and sometimes just people who were interested in solving a different kind of puzzle or problem.

By the time the volunteer system got rolling we had some fifty volunteer editors working on the images, along with three team members. When it came to the crunch, though, many volunteers dropped out after attempting a single image, because the fine control of the mouse necessary for the work was beyond the majority of users, and funding constraints prevented us from supplying them with graphics tablets. Nine volunteers contributed significantly, though, and there is no doubt that the project would not have been completed on time without them. The use of volunteer restorers, as well as the sheer number of images, informed the decisions we made about the type of improvement to the images that was possible, since we were constrained not only by time but also by the varied skill level of our team. Many of the volunteers were able to come to Oxford for a couple of hours of training and to try the cloning work for themselves. More distant volunteers used training videos distributed through the project website (www.tudorpartbooks.ac.uk) and YouTube.26 A time-lapse restoration (two hours and twenty minutes reduced to eight minutes) is included. It shows the entire process from original image through to finished restoration.

Only a few of the volunteers had used image-processing software before, and none had attempted work of this type, so they were receptive both to instruction and to (tactful) critical appraisal of their work. A package of “starter” materials, including a glossary of note, extra-musical, and letter shapes (for those who had never read secretary hand) and a baseline image, was given to each volunteer so that they had visual materials as well as the face-to-face training and online tutorials. Usually the volunteer was given a fairly easy image to start with; they would work on a single line and then return the image to the in-house team for comments via an online shared file system. Comments could be emailed back using PDFs with screenshot samples. The first line of work would usually indicate to the team the type of editing that would be most suited to the volunteer’s skills, allowing us to match images to the people involved. Each image that came back was checked and commented on. Minor errors were tacitly fixed by the in-house team.

Forensic Reconstruction

The work on the books began with the term “restoration” in mind, but we could not “restore” areas of a page without an accurate picture of what those areas might originally have looked like. Though much of the writing, both music and text, was discernible and could indeed be restored, areas of severe lacing, large holes, or solid dark patches could only be conjectured based on the shape, size, and position of the patches, albeit with a fair degree of certainty thanks to the relatively predictable musical vocabulary of the time and by transcribing all the parts (see below in the sections titled “Extrapolation” and “Contemporary Concordances”). Although we could restore the original pitches and values, we were not able to restore the true appearance of the lost areas of the page, only an approximation. A more accurate generic term for the work that would allow for the replacement of these missing segments with something approximating their original appearance would be “reconstruction,” and the “restorers” should be more accurately referred to as “editors.”27

Because of the lacunae in the pages, the process required us to look beyond the images immediately to hand in order to build a reconstructed page. In this respect we were fortunate to have a number of sources of information to draw upon.

Sources of Information

Master High-Resolution RGB Images

The new digital images were of a color depth that allowed our image-processing software to determine color separation where the naked eye could not. Their high resolution meant that the pages could be enlarged so that very fine detail of the text could be seen that would not have been visible if examining the original leaves. These images were the primary source of information. Reading approximately 90% of the text from these pages was possible, given enough time to disentangle it.

TCM Photostats

In the early 1920s, the Carnegie Trust made a grant to the editors of the Tudor Church Music (TCM) series in order to make negative photostats of some pages of the Sadler books that were required for making editions of works in the series. The black and white photostats survive in Senate House Library in London.28 The photostats show the manuscript in a notably different state to its current one: the decay is at an earlier stage, so even when show-through is apparent, it is easier to differentiate between the surface writing and that of the reverse. Some of the holes are considerably smaller, but a few areas are more difficult to read as they are obscured by gauze that was apparently lifted in the 1970s when the manuscripts were conserved, microfilmed, and officially withdrawn from access (though we know that they had not been accessible for over a decade before that).

Infrared Images

There aren’t many of these, and they were done toward the end of the project, mainly to confirm readings that we could neither extrapolate from what was left, nor read from the photostats or the master images. We had to limit the number of additional images because of the fragility of the documents, even after conservation and stabilization with Japanese tissue. The infrared images were, however, extremely revealing, not only in questioned readings but also in confirming what we had suspected about ink types. Since the infrared results were so good there was no need to investigate multispectral imaging, though that option was in any case not available because access to the original leaves is so limited.

Global Adjustment Versions (not based on color selection)

There are types of manipulation that can be applied to digital images that improve their readability (if not their appearance) by separating out colors that are too close for differentiation by the naked eye, and increasing the difference until it becomes visible. In many cases, pages that looked at first to be intractably illegible gained sometimes as much as an 80% improvement in readability through applying global processes such as thresholding, level and color-balance or contrast adjustment, and the like to the master images—a process that was only useful because of the high resolution of the masters, which preserved a very high level of color separation. Even so, the results still required painstaking attention to make it possible to understand the notation, but these globally adjusted images provided us with another level of information.
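To make the idea of a global adjustment concrete, here is a minimal sketch in plain Python of a level adjustment and a threshold applied to every pixel of a tiny grayscale image. It is not the Photoshop workflow the project used; the function names and the pixel values (surface ink, show-through, and paper at 100, 120, and 150) are hypothetical and chosen only to show how a small tonal difference can be widened until it becomes visible.

```python
def adjust_levels(pixel, black, white):
    """Stretch values in [black, white] to the full 0-255 range, clipping outside it."""
    if white <= black:
        raise ValueError("white point must exceed black point")
    scaled = (pixel - black) * 255 / (white - black)
    return max(0, min(255, round(scaled)))


def threshold(pixel, cutoff):
    """Map a pixel to pure black (0) or pure white (255)."""
    return 0 if pixel < cutoff else 255


def apply(image, func, *args):
    """Apply a per-pixel function to a grayscale image stored as a list of rows."""
    return [[func(p, *args) for p in row] for row in image]


# Hypothetical 8-bit values: surface ink 100, show-through 120, paper 150.
sample = [[100, 120, 150],
          [120, 100, 150]]

stretched = apply(sample, adjust_levels, 100, 150)
binary = apply(sample, threshold, 110)

print(stretched)  # ink and show-through now differ by 102 levels instead of 20
print(binary)     # only the surface ink remains black
```

The same operation is applied to the whole image without selecting any particular color, which is what distinguishes these global versions from the selective adjustments described later.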

Extrapolation

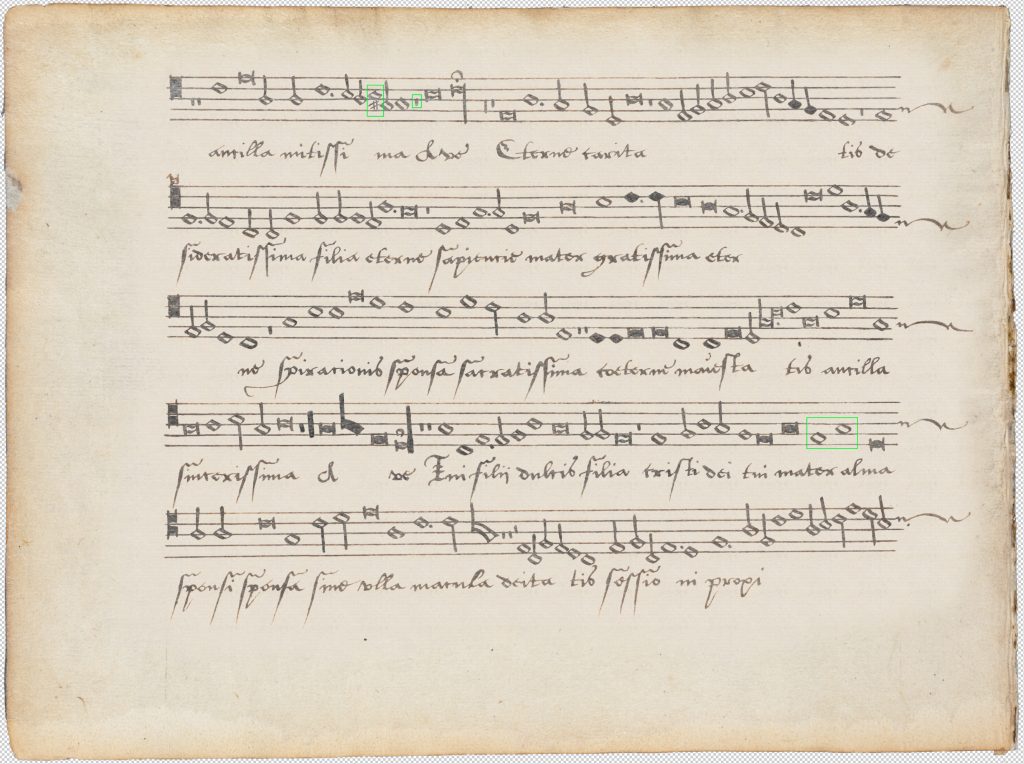

In many cases, a note could not be convincingly retrieved precisely, but it was possible to deduce both value and pitch from the visible context, so the missing note could be replaced by cloning one of the correct pitch and value from a cleaner part of the page. In a string of minims, for example, a single missing notehead could be deduced in value from positioning (unlikely to be an isolated semiminim) and in pitch from the length of the tail and from having eliminated pitches that were visibly clear of notes. An example from Aston’s Te Deum (Mus.e.4 12v):

The boxed note must be on the second stave-line up (and is not in either of the vertically adjacent spaces). It has no tail, although tails have clearly been erased above the notes: the noteheads were originally miscopied, and their erasure contributed to the damage here, since the paper was scratched thinner and two layers of ink were applied on this side. It must therefore be a void breve (square note) or semibreve (diamond note); the context does not allow for a colored (solid black) note. The photostat contributes nothing to the reading because of the gauze overlay. The infrared image confirms the two diamond notes following the boxed note, and it also confirms the absence of the vertical downstrokes that would be present if the missing note were a breve. The missing note can therefore only be a diamond semibreve on the second line up. The value of the note is confirmed by transcription of all parts, since any other value renders the polyphony incorrect.
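The reasoning in this example is a process of elimination, and it can be sketched as a small filter over candidate note shapes. The table of shapes and the feature labels below are a deliberately simplified illustration (an assumption for the sake of the example, not a formal model of the notation), and the evidence values are those reported above.

```python
# Candidate note shapes and the features each would leave on the page.
# The feature labels are illustrative, not a formal notation model.
CANDIDATES = {
    "void breve":        {"tail": False, "colored": False, "downstrokes": True},
    "void semibreve":    {"tail": False, "colored": False, "downstrokes": False},
    "colored breve":     {"tail": False, "colored": True,  "downstrokes": True},
    "colored semibreve": {"tail": False, "colored": True,  "downstrokes": False},
    "minim":             {"tail": True,  "colored": False, "downstrokes": False},
    "semiminim":         {"tail": True,  "colored": True,  "downstrokes": False},
}


def eliminate(candidates, evidence):
    """Keep only the candidates consistent with every piece of evidence."""
    return [
        name
        for name, features in candidates.items()
        if all(features[key] == value for key, value in evidence.items())
    ]


evidence = {
    "tail": False,         # no tail visible on the boxed note
    "colored": False,      # context rules out a solid black note
    "downstrokes": False,  # infrared shows no vertical downstrokes
}

remaining = eliminate(CANDIDATES, evidence)
print(remaining)
```

Each source of information removes some candidates, and a reading is accepted only when a single candidate survives and the transcription of all parts agrees with it.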

Contemporary Concordances

These were more difficult sources for information, as they were outside the confines of the Sadler books themselves. Variants between concordant copies of the same work are not uncommon between contemporary sources. The Baldwin and Dow partbooks however—and particularly Baldwin—provided readings that were close enough to the complete music that their contribution was valid,29 although of course Baldwin is lacking its tenor book, so was no help in working on Mus.e.4, the tenor book in the Sadler set.

The primary usefulness of the concordant sources—as for the modern editions—was in pointing us in the right direction when a reading was difficult or dubious.

Modern Editions

In only one case did we have to refer to an edition in which the relevant part had been musically reconstructed, but in fact that leaf, thanks to the use of infrared imaging, ended up showing that the reconstruction was musically slightly better than the original.

All areas of the process have had to evolve, from decisions about the overall policy with respect to the original documents to the actual reconstruction techniques we are using. Although ideally we would base all decisions on loyalty to the original manuscript and aesthetic result, the reality of time and funding has meant that the simple time versus result equation has had a major bearing on decisions about methodology and the final appearance that we were aiming for.

Our first decision in editing the images was one we had to some extent anticipated. We asked: Should we attempt to create in the images a state the manuscripts might have been in when they were written, or should we aim for something that would approximate the way these manuscripts would look today had they been prepared using better-quality materials? The latter choice prevailed, partly because we could never know how the books looked on the day they were written. We have many models before us of how they might have looked today from other contemporary partbook sets (fig. 8).

However, considerably more relevant are the few pages from the Sadler books that have not succumbed to acidity (fig. 9).

The next question was how far we should intervene in these images. We asked: Should we simply leave unsightly patches if they do not interfere with the reading of the musical text?

The problem with leaving the page shown in fig. 10 untouched becomes obvious when it is placed next to a page that has been repaired. The repaired page will look very close in quality to the baseline image, leaving the untouched page inexplicably messy. Parity between images has therefore become part of the equation: our original rule of thumb was that we should make the pages look as they would today if Sadler had not used such acidic ink (using undamaged pages from his books as a baseline), and that rule applies to all the pages, not just some. All show-through needs to be eliminated, not simply that which makes it impossible to read the music. That answered the question of whether or not to intervene with pages like 49r (fig. 10), but it also meant that we had to find a way to remove bleed that caused fuzziness around notes (not evident on non-acid pages). We also had to repair or replace the holes, and work out what notes should go in those gaps.

The rule of thumb, however, meant that we should not correct or interfere with Sadler’s copying errors.

Folio 43r (fig. 11) needs no restoration: the apparent confusion on the middle line of this detail arises from the scribe copying the wrong notes (and words), blotting them out, and recopying the correct music over the top. This type of damage, not occasioned by acidity, was not removed, since it elucidates the copying process and may give clues to the exemplar for these books. Nor should we have straightened out the bump in the top line, where the copyist apparently left his finger protruding above the ruler when he drew the line, or eliminated the red ink splatter at the top right beneath the stave line.

Processes

A detailed text description of such a highly visual process is laborious, and probably unnecessarily technical, particularly to readers unfamiliar with image-processing tools. The processes are best demonstrated visually, and those interested in learning more about the Adobe Photoshop tools are directed to the online training videos available on YouTube.30 The process will be described in more detail in the introduction to the published version of the images.

The primary process for the reconstruction of Q15 was cloning, in which a clean part of the page is copied with a feathered edge over a part where there is show-through, seamlessly replacing “bad” areas with “good.” This can be implemented both by copying targeted clean areas over areas needing repair, and by creating a pattern fill from a clean part of the leaf and “painting” this over damaged parts. The first barrier to cloning that we encountered was the scribe’s failure to use a rastrum to rule the stave lines in the Sadler books. Instead of the staves being ruled with a fixed-width multinibbed pen, as was commonplace at this time (if printed music paper wasn’t used), each line was hand ruled, so the spacing is irregular and the lines are often not even parallel. Attempting to clone a good part of the stave over a bad one further along the line required multiple adjustments between target area and clone point.
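For readers who think more easily in code, cloning with a feathered edge can be sketched as a weighted blend: the copied patch fully replaces the target at its center and fades into the existing pixels toward its edges. The sketch below is plain Python on a tiny grayscale grid, not the Photoshop tool itself; the function names, the linear feather, and the pixel values (200 for clean paper, 100 for a damaged area) are assumptions made for illustration.

```python
def feather_weight(i, size, feather):
    """Weight rising linearly from the patch edge to 1.0 over `feather` pixels."""
    distance_from_edge = min(i, size - 1 - i) + 1
    return min(1.0, distance_from_edge / (feather + 1))


def clone_patch(image, src, dst, size, feather=1):
    """Copy a size x size patch from src (row, col) to dst (row, col), blending at the edges."""
    result = [row[:] for row in image]  # leave the input image untouched
    (sr, sc), (dr, dc) = src, dst
    for y in range(size):
        for x in range(size):
            alpha = feather_weight(y, size, feather) * feather_weight(x, size, feather)
            source = image[sr + y][sc + x]
            target = image[dr + y][dc + x]
            result[dr + y][dc + x] = round(alpha * source + (1 - alpha) * target)
    return result


# Hypothetical page: clean paper (200) on the left, a damaged area (100) on the right.
page = [[200, 200, 200, 100, 100, 100] for _ in range(3)]

repaired = clone_patch(page, src=(0, 0), dst=(0, 3), size=3)
for row in repaired:
    print(row[3:])
```

The center of the cloned patch takes the clean value outright, while the edges are a mixture of old and new, which is what hides the join. It also shows why irregular, hand-ruled staves are awkward: a patch copied from one place only blends seamlessly if the lines underneath it fall in the same positions at the target.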

An early technique we employed was to use a selective-color level adjust, which could fade back (but rarely completely fade out) much of the rust-colored bleed (haloing) and show-through. The problem with this approach was that level-adjusted areas rarely gave the restorer sufficient choices for clean areas from which to clone on heavily damaged leaves. Although the level adjust could greatly clarify the difference between show-through and surface writing, it still left considerable cloning work necessary to rescue the page entirely.
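A selective-color adjustment of this kind can be pictured as two steps: select the pixels close to a chosen color, then move only those pixels part of the way toward the paper color. The following plain-Python sketch illustrates that idea under stated assumptions; the RGB values for rust bleed, paper, and ink, the tolerance, and the `strength` parameter are all hypothetical, and the code is not a reproduction of the Photoshop adjustment.

```python
def color_distance(a, b):
    """Largest per-channel difference between two RGB colors."""
    return max(abs(x - y) for x, y in zip(a, b))


def fade_selected(image, target, paper, tolerance, strength):
    """Blend pixels near `target` toward `paper`; strength 0 leaves them, 1 replaces them."""
    faded = []
    for row in image:
        new_row = []
        for pixel in row:
            if color_distance(pixel, target) <= tolerance:
                pixel = tuple(round(p + strength * (q - p)) for p, q in zip(pixel, paper))
            new_row.append(pixel)
        faded.append(new_row)
    return faded


RUST = (150, 90, 60)     # hypothetical bleed color
PAPER = (230, 220, 200)  # hypothetical clean-paper color
INK = (40, 30, 30)       # hypothetical surface-ink color

strip = [[INK, (152, 92, 62), PAPER]]
result = fade_selected(strip, RUST, PAPER, tolerance=10, strength=0.5)
print(result)
```

With a strength below 1 the selected pixels are faded back rather than removed, which matches the observation above that the bleed was rarely faded out completely.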

The amount of surface haloing on some pages was also problematic: notes were fuzzy, and where they were close together, they could end up bleeding into one another. If we didn’t remove the fuzziness there was a notable disparity between these pages and those where the notes had not bled. Carefully cloning around the edges of all the notes was out of the question as it would take far too long. It also ran the risk of introducing an artificial sharpness to the pen stroke and would falsify or obscure information about pen strokes that is relevant to the identity of the scribe(s), but level adjustment on the bleed color left the notes with a noticeable corona.

We had questioned at first whether we should retain the darkened paper color between the stave lines, caused by bleed from the stave-line ruling. The bleed made cloning difficult, as we had to match the background color of the notes to the varying tones of the background of the paper. Yet it was time-consuming to remove through cloning alone and was not effectively removed by a level adjust when darker in tone.

The change in terminology from “restoration” to “reconstruction” was liberating, since it allowed us to take a more aggressive approach than we had previously, cleaning away all of the show-through with color selects and pattern fills—a tactic that speeded up the work since we could automate more of the initial recovery by scripting a series of color selects that could be selectively “turned off” if they took out too much information. This was essential in making the project feasible.

Having worked through some forty images using level adjust as our first line of attack, we faced the amount of time required for the residual cloning and the unhappy realization that the project might never be completed if we used this method. This resulted in a switch to a more aggressive approach: instead of level adjusting (which helped, but nevertheless left a great deal of cloning to be done) we would take the same color selection and use a pattern fill instead. The patterns were created from clean areas of the page and replaced the selected areas of show-through completely, rather than just fading them. The benefit was immediately visible: whole areas of the staves would no longer require cloning, and the background paper color and texture were much more uniform. The downside was that because the stave ruling was generally much lighter than the notes, we tended to eliminate a lot of the stave lines along with the show-through. With the level adjust the stave lines became paler but were still visible.
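The difference between the two treatments can be illustrated in miniature. The sketch below is not the project's Photoshop script; it is a hypothetical Python illustration in which the pixel values, the `fuzziness` tolerance, the 50 percent fade, and all function names are assumptions chosen for the example. It selects every pixel close to a sampled show-through color and then either fades it toward the paper color (a crude stand-in for a level adjust) or replaces it with a tiled sample of clean paper (a pattern fill).

```python
# Hypothetical illustration only: one row of an RGB image as (r, g, b) tuples.
PAPER = (230, 220, 200)   # assumed clean paper color
INK = (40, 30, 25)        # assumed surface ink
SHOW = (170, 120, 90)     # assumed rust-colored show-through
STAVE = (180, 136, 106)   # assumed pale stave line, close in color to show-through

page_row = [[PAPER, SHOW, INK, STAVE]]
# A small sample of "clean paper" used as the fill pattern (keeps some texture).
paper_pattern = [[(228, 219, 199), (231, 221, 202)]]


def is_selected(pixel, target, fuzziness):
    """Color select: every channel lies within `fuzziness` of the target."""
    return all(abs(p - t) <= fuzziness for p, t in zip(pixel, target))


def fade_selection(image, target, fuzziness, strength=0.5, paper=PAPER):
    """Stand-in for a level adjust: move selected pixels part-way to paper."""
    result = []
    for row in image:
        new_row = []
        for px in row:
            if is_selected(px, target, fuzziness):
                px = tuple(round(c + (w - c) * strength) for c, w in zip(px, paper))
            new_row.append(px)
        result.append(new_row)
    return result


def pattern_fill(image, target, fuzziness, pattern):
    """Replace selected pixels outright with the tiled clean-paper pattern."""
    ph, pw = len(pattern), len(pattern[0])
    result = []
    for y, row in enumerate(image):
        new_row = []
        for x, px in enumerate(row):
            if is_selected(px, target, fuzziness):
                px = pattern[y % ph][x % pw]
            new_row.append(px)
        result.append(new_row)
    return result


faded = fade_selection(page_row, SHOW, fuzziness=20)
filled = pattern_fill(page_row, SHOW, fuzziness=20, pattern=paper_pattern)
```

In this toy row the pale stave pixel falls inside the same selection as the show-through, so the pattern fill replaces it with paper while the fade merely lightens it, which mirrors the trade-off described above.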

The following image is one of the most problematic in the collection, with tissue overlay, holes where the ink has burned through and areas of paper have been lost, and clustered notes so close that it is almost impossible to tell how many notes should be in each of the burned patches. Only the lowest line is fully decipherable.

One problem with level adjustment is the “fluorescence” it introduces. The only way to avoid this was to tweak the individual color channels, which minimized the effect of the adjustment, rather defeating the object. The end result was not terribly useful, just brighter (and in places unpleasantly so). This was not a desirable finish for a print output, though it could be used for an online reference alongside the original images. On this image at least, the aesthetic result of the level adjust is really not acceptable, and barely helpful.

After color-select pattern filling there was still a considerable amount of work to be done on this image, but four advantages over the level-adjusted image were immediately apparent: the image remained a realistic color; quite large areas were effectively already repaired and would not require any cloning, mainly in the text show-through; the rusty background to the staves had been eliminated, resulting in an image that matched the baseline image more closely; more of the text had emerged from the background noise of the show/burn-through. The background to the staves now had clean areas that could be used to clone over the damaged areas. Not visible at this size is that the pattern fill retains paper texture in the repair because it was created from a clean area of the page. The level adjust tends to flatten out fine detail like paper texture, increasing the artificial appearance of the edited image.

It was abundantly clear that many of the notes on this page simply could not be “restored” at this stage—even if we knew what they are meant to be—because their original shape was lost or obscured. At this point we took the digital scans of the TCM photostats and superimposed them on the image as an additional layer. There was sometimes considerable distortion to deal with in importing the photostat scans to the master images; there had been shrinkage and other distortion to the original leaves in the intervening decades, and the photostats were also wrinkled and beginning to show their age. However, with some manipulation, the photostat scan could be superimposed almost exactly on the master color image, and the opacity of this new layer turned up or down to allow the editor to work out the correct shape and position of the original text, thus in this case elucidating many of the notes that were not visible on the master RGB image.

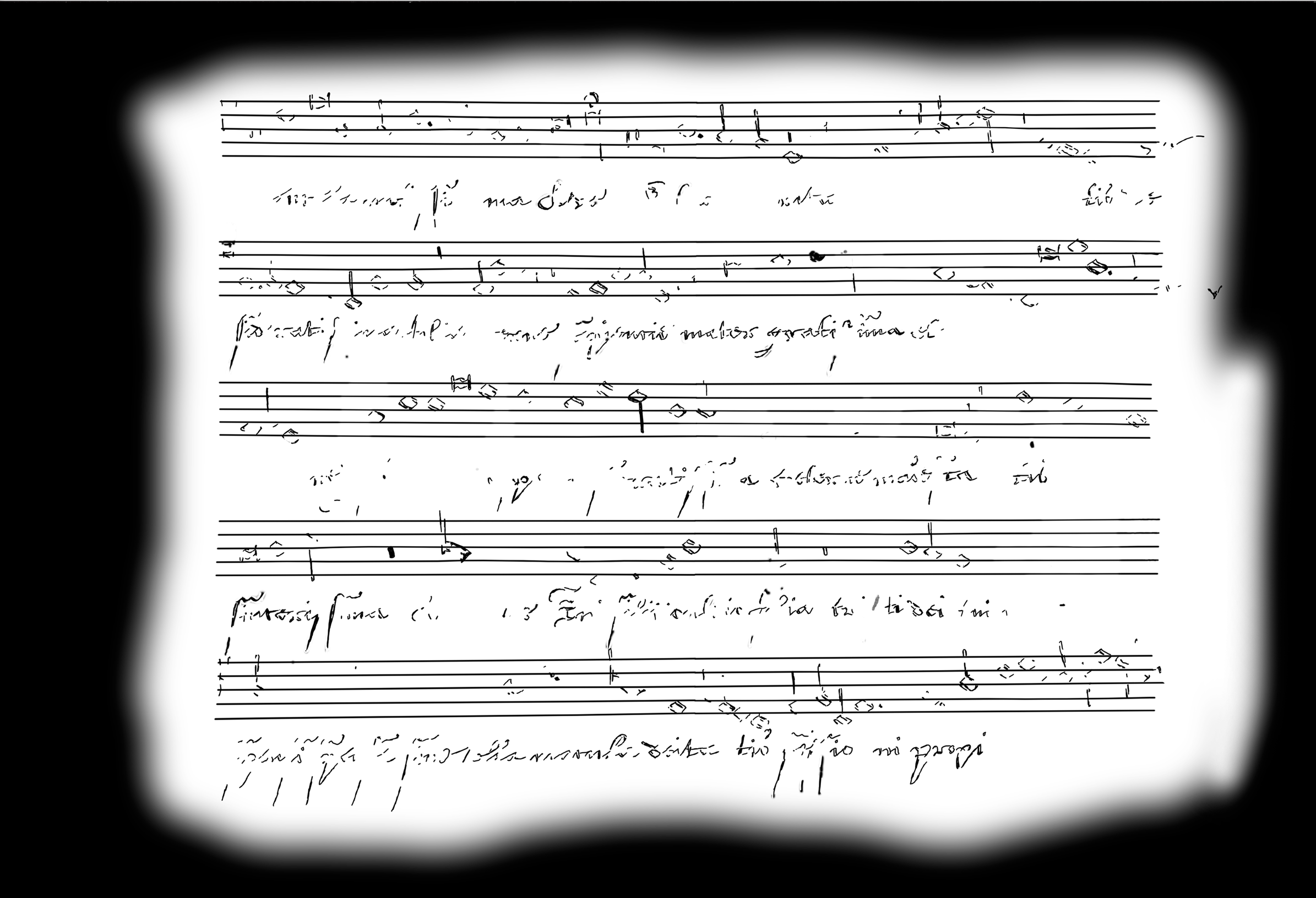

Figure 15. Mus.e.3, f. 22v, negative photostat. For the purposes of this reproduction, the scan has been inverted to positive, as the negative version yielded a very black result in print. Courtesy of Senate House Library, University of London.
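The adjustable-opacity overlay described above amounts to a weighted average of two aligned images. The following is a minimal Python sketch of that idea, not the Photoshop workflow itself: it assumes the photostat has already been warped into register with the master image (the difficult step, which is not shown), and the function names and pixel values are hypothetical.

```python
def blend_pixel(master_px, overlay_px, opacity):
    """Weighted average of two RGB pixels; opacity 0 shows only the master."""
    if not 0.0 <= opacity <= 1.0:
        raise ValueError("opacity must lie between 0 and 1")
    return tuple(
        round((1 - opacity) * m + opacity * o)
        for m, o in zip(master_px, overlay_px)
    )


def overlay_photostat(master, photostat, opacity):
    """Blend an already-aligned photostat layer over the master image."""
    return [
        [blend_pixel(m, p, opacity) for m, p in zip(master_row, photostat_row)]
        for master_row, photostat_row in zip(master, photostat)
    ]


# Assumed example: a damaged (rusty) master pixel where the photostat,
# stored here as gray RGB values, still records dark ink.
master_image = [[(200, 100, 50), (230, 220, 200)]]
photostat_image = [[(0, 0, 0), (240, 240, 240)]]

half_visible = overlay_photostat(master_image, photostat_image, 0.5)
```

Turning `opacity` up or down between 0 and 1 moves the result between the master image alone and the photostat alone, which is what lets an editor compare the two readings in place.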

There was some experimentation with using the photostat overlay as an interference filter, but this was unhelpful, partly because it was extremely difficult (i.e., time-consuming) to line up every note accurately, but also because the photostat itself was not free of show-through damage. Had these surrogates been made before the manuscript had deteriorated to such an extent, then using them as a filter overlay might have been more successful.

The photostats are an extremely valuable record of the deterioration of the manuscripts over the last century. The deterioration is particularly apparent in the rectangular holes left by the initial A on the reverse: in the photostat these holes are smaller, though dark from burning, but had apparently crumbled away by the 1970s. In the middle line, there are now several holes where there had been complete paper before. Unfortunately, only about half of the leaves in the books are recorded by the photostats, but what we have was enormously useful in the reconstruction process, since if we could see the notes on the photostats we could reconstruct them on the color images with confidence.

Cloning is extremely time-consuming, and when cloned areas start to intrude on the note-heads themselves, not simply the areas of show-through around and between them, then the process is on much more questionable ground. With our mixed and dispersed workforce, we also faced disparities in decisions about cloning that meant a volunteer could completely change the shape of a notehead or stem, even though the correct pitch and value were retained, leaving the in-house team with time-consuming tidying up to do.

This led to a further evolution in the process, which allowed us to eliminate cloning as a repair strategy almost completely. Some of the stave-line detail was lost to the pattern fills; so also were many of the finer lines in the writing: otiose strokes on the letters and the “flick” at the initiation or termination of the pen stroke on the noteheads would often disappear. A mask was therefore placed over the pattern-filled layers, and the missing stave lines and pen-strokes were manually eliminated from the fills and thus brought back to the image.
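The masking step can be pictured as a per-pixel choice between the filled layer and the original beneath it. The sketch below is a hypothetical Python illustration rather than the project's Photoshop procedure: the mask is assumed to be a grid of booleans that an editor has painted by hand, and the names and pixel values are invented for the example.

```python
def apply_mask(original, filled, mask):
    """Where the mask is True the fill is hidden and the original shows through."""
    return [
        [orig_px if masked else fill_px
         for orig_px, fill_px, masked in zip(orig_row, fill_row, mask_row)]
        for orig_row, fill_row, mask_row in zip(original, filled, mask)
    ]


# Assumed example row: show-through, a pale stave line, and a fine pen flick.
original_row = [[(170, 120, 90), (180, 136, 106), (150, 110, 85)]]
# The pattern fill has replaced all three pixels with paper.
filled_row = [[(230, 220, 200), (230, 220, 200), (230, 220, 200)]]
# The editor masks the stave line and the flick, but not the show-through.
editor_mask = [[False, True, True]]

restored_row = apply_mask(original_row, filled_row, editor_mask)
```

Because the original pixels are never overwritten, a stroke removed by an over-eager fill is recovered simply by extending the mask.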

As seen in fig. 13, there remained considerable show-through and burn-through to eliminate, but having created a mask, a quick solution was to create a blank pattern-fill layer, populated entirely with the paper pattern, and use that layer to “paint” a paper pattern over the remaining show-through on the page (nicknamed “quick clean”). Eliminating large areas of burn-through this way was very fast, as the stave mask meant the restorer could draw sweeping strokes through the stave without wiping out the stave lines and did not need to find clean areas for cloning targets.

There is something of a gray area in the relative amounts of time required by masking or painting: is it quicker to “undelete” the lost strokes than to step back to the previous pattern fill and just do more quick cleaning? I tried both methods on an image with relatively light damage: restoring the missing strokes in the underlay and music took 8.5 minutes, while cloning the parts of the show-through that had been removed by that fill took about 9.5 minutes. Not much difference in this case, but on a more damaged page that gap would widen. Our active editors felt that even if there was little difference in the time it took, the process of bringing back lost strokes seemed quicker because it required marginally less fine motor control than quick cleaning, and therefore gave the perception of being easier which, in a job as extensive and potentially tedious as this was, was an important distinction. Experience enabled restorers to judge whether the more extreme fills helped or hindered their work, and because the fills were created in multiple layers, editors could selectively use or turn off fill layers until they reached a result with which they felt happy. Certainly, with more damaged pages an aggressive pattern fill could save considerable time.
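The ability to turn individual fill layers on or off can be modeled as a stack of non-destructive overrides on top of an untouched base image. The following Python sketch is an assumption-laden illustration of that principle only: the layer names, the dictionary layout, and the pixel values are hypothetical and do not describe the project's actual files.

```python
def flatten(base, layers):
    """Composite the enabled layers over the base; later layers take priority."""
    result = [list(row) for row in base]  # copy, so the base is never altered
    for layer in layers:
        if not layer["enabled"]:
            continue
        for (y, x), pixel in layer["pixels"].items():
            result[y][x] = pixel
    return result


PAPER_FILL = (230, 220, 200)  # assumed clean-paper value

# Assumed base row: show-through, a faint pen stroke, and surface ink.
base_row = [[(170, 120, 90), (150, 110, 85), (40, 30, 25)]]

fill_layers = [
    # A cautious fill that only covers obvious show-through.
    {"name": "light fill", "enabled": True, "pixels": {(0, 0): PAPER_FILL}},
    # A more extreme fill that also wipes out the faint stroke.
    {"name": "aggressive fill", "enabled": True, "pixels": {(0, 1): PAPER_FILL}},
]

with_all_fills = flatten(base_row, fill_layers)

# The editor decides the aggressive fill removed too much and switches it off.
fill_layers[1]["enabled"] = False
without_aggressive = flatten(base_row, fill_layers)
```

Switching a layer off restores exactly what that layer had covered, so the choice between a more extreme fill and more manual cleaning can be revisited at any point without redoing earlier work.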

Once the gross damage had been eliminated, the image was zoomed in as closely as possible, the brush size reduced, and any remaining show-through was removed by painting carefully around the shape of the original pen strokes. Saving the work in layers ensured errors in restoration could be fixed easily.

Although this appears to be a large number of notes, all but two of them were created by drawing around the shapes visible in the photostat, so are reliable reproductions of the original notation. The text underlay was heavily damaged but there was no doubt about what it should be, its positioning, or angulation and basic form of the letter shapes, so the words or individual letters were cloned from elsewhere on the page. What could not be seen on the photostat had to be deduced from context and concordance, and is indicated on the final image boxed in green (see fig. 19).

After much consultation about ways in which to indicate which notes had been rebuilt rather than tidied up,31 I made an executive decision that a simple fine-line green box was the best solution for the color reproductions: it is immediately clear to the reader that these notes are singled out (and the preface gives a clear explanation for the boxed notes); the color appears nowhere else in the books and cannot be mistaken for anything other than an editorial overlay. Niceties such as drop shadows were abandoned because they might interfere with adjacent notes.

An entirely cloned repair could take up to twelve hours, but a reconstruction of the same page using the pattern-fill/mask/quick-clean method could take three to four hours and would result in a completely readable and thus performable page image. The ethics of the process are discussed in the introduction to the publication of the Sadler images and have been the subject of papers by this author in the past.32

The writing in the Sadler books (both text and music), although fairly consistent to a casual glance, is actually quite varied; spacing of the notes is irregular, as the scribes take care to align the notes with the words, so sometimes notes are elongated and crushed together, and at other times they are widely spaced.33 In heavily damaged areas this can often make it extremely difficult to discern even how many notes we were trying to recover, never mind their pitches and values. We were superficially dealing with a relatively limited vocabulary of note shapes. That vocabulary, however, turned out to be subtler than expected at the outset.

Quite early in the editing process, the reconstruction team started to notice disparities in the way that notes were constructed that they would not have expected in the work of a single scribe. Carefully drawing around every pen stroke meant that the notation was examined in an extremely intimate level of detail, resulting in the discovery that these books were not by any means the work of a single scribe, as had been the assumption since they were first described by Edmund Fellowes.34 His assertion that they (and the Wilmott and Braikenridge manuscripts) were in the hand of John Sadler has never, until now, been questioned. The parts of the text most easily eliminated by the scripted pattern fills were the most illuminating, since it was in the light flicks of the pen and the way in which heavy lines were joined by lighter strokes that the habits of different scribes emerged, as well as the way in which the noteheads were constructed. This is not the place to elucidate the scribal practices in the books, which are complex and require considerable exegesis, but this is an important by-product of the editing work.35

One extremely important question asked of images that have received this kind of editing regards the codicological use to which the resulting images can be put.36 Can they be considered reliable witnesses of the original source, or is their content too compromised by the reconstruction methods? If we had espoused the cloning method to repair the pen strokes, then I think our results would have had questionable value (at best) codicologically. However, the care with which the original pen strokes have been retained (care that unfortunately knocked many of our volunteers out of the game) means that the edited images can be used realistically—preferably alongside the original images that are available online—to examine scribal practice in these books. The variations in note formation and use of otiose strokes by scribes clearly having learned the same model hands are now visible enough to differentiate between them, no longer interfered with by the noise of show-through. The network of corrections and additional marks are now clearly visible, as are shifts in ink color, corrections and additions by later scribes, and areas of the page where a second scribe has written over the notes of the first.37

Parity

Trying to ensure a level of parity between members of the team was sometimes a challenge, but changing from level adjusting and cloning to pattern filling and quick cleaning was of benefit in that respect. Cloning on level-adjusted or directly on original images resulted in a considerable disparity in appearance depending on the personal style of the editor.

Restorer 1 (fig. 21b) cloned a clean part of the unedited manuscript image (fig. 21a) into the gaps between and around the existing notes (fig. 21d), while restorer 2 (fig. 21c) took pen strokes from an undamaged part of the page and cloned them over the top of the damaged ones (fig. 21e). The latter intervention, which obscures more of the original image, ironically looks more natural and unrestored than the work of restorer 1, which preserves more of the original pen strokes.

Both techniques have validity, but the variability in the result is very obvious. The shift from the primary work being based upon level adjustment to that of pattern filling largely eliminated this disparity by providing a base image with a much more consistent background and edge definition, but more importantly, editors received an image in which much of what would previously have been cloned was already cleared with the pattern fill. The approach used by restorer 1 (with refinement for the use of a pattern-filled starting point rather than a level-adjusted one) was chosen in preference to that of restorer 2, and as a result there was considerably less disparity between images prepared by different editors.

A further difficulty in parity emerged over time, because both volunteers and in-house editors got better as they became more experienced. About halfway through the project we were able to purchase twenty-seven-inch retina screens, and these revealed imperfections that had not been particularly noticeable before. When all the images had been “completed,” therefore, the in-house team went through them all again: the first run-through was done by one person who could measure each image against a single notional paradigm. The images were graded, and the now two-person in-house team worked over the images again, bringing older work up to the standard of the newer work (sometimes having to discard the original work altogether and start again), and further ironing out differences between images edited by different members of the team.

Color parity was not an issue: if a volunteer was working from a different operating system and screen, they would only see one range of colors, and no color-shift editing was done. As long as an editor’s work did not introduce sudden shifts in background color or ink from copying or cloning (obviated by the methods we were using), then even if the overall gamut looked different to different users, their editing would not result in a change in color between images. One user had Photoshop’s color-management system set up incorrectly, so it applied a different color profile on opening the image. The shift in gamut was dramatic enough to be instantly noticeable on the calibrated screens used by the in-house team. Once we had identified the problem and reapplied the correct color profile to the images from that editor there was no difference in color gamut between the images from this editor and any others.

We delayed until last the question of what to do about pages that had been partially or completely overlaid with tissue repairs. The use of tissue had been occasioned by acid burn-through, but the shapes of notes and letters under tissue were far more difficult to establish accurately than elsewhere, and we did not always have the benefit of a photostat for these pages. With the final appraisal sweep, we established that work on these areas of pages was generally less reliable than elsewhere, so the decision was taken to leave the cloudiness on the image caused by the tissue so that users of the books would not only be able to see where the pages had been stabilized in this way, but would also have a visual signal that these areas should be read paleographically, with a slightly more jaundiced eye.

Figure 22b. Mus.e.3. f. 6v (detail) showing areas of partial tissue overlay after editing (note the retention of erasures/smudges by the original scribe and the later pencil insertion of “35” over the group of rests).

Accuracy

The final stage in the process was checking our work. This was a three-stage process. First, an editor who had not previously worked on the image examined it by switching the editors’ work on and off, looking at each word, note, and mark individually in the original image and the reconstructed version. In this way, omissions (e.g., missing dots or sharp signs) or incorrect repairs (e.g., black notes that should be void) could be caught.

In the next stage, the repaired image was checked against a reliable edition to ensure it was performable: this alerted us to any reconstruction errors that were missed by the first checker, and it also allowed us to note errors made by the original copyist. If no edition existed, then members of the musicology team made one, ensuring that any scribal errors were noted editorially. Copyist errors were left as they are in the MS but footnoted in the publication, so that performers would not be derailed by scribal faults.

Finally, the images were used to perform from, and any problems encountered checked to determine whether they were editorial or scribal (and not simply performer error). With so many images, correcting proofs was a mammoth task, and some images went back through all three checking stages more than once, but this three-stage process enabled us to offer a reliable final reading.

While we aimed for as consistent an approach as possible from our editors, consideration of the final title of the publication of the Sadler images in print (“collaborative reconstruction”) celebrates rather than ignores the fact that this work is bound to exemplify a variety of approaches. Each image received a final check from a single editor who corrected passages that appeared too far outside the norm, so changes between editors are now difficult to spot. The collaborative nature of the work is also manifest in the process through which individual images passed: the pattern fills may have been implemented by one restorer, retrieval of stave lines lost by pattern filling by a second, retrieval of fine detail in letters and notes (flicks) by a third, quick-cleaning by a fourth, cloning by a fifth; the result was checked for errors (accidentally removed dots, rests, etc.) and corrected by a sixth, seventh, and eighth, with the final sign-off by a ninth.38

One suggestion made whenever we demonstrated the Sadler reconstruction work was that surely at least some of this process could be automated. In a way, we did automate by scripting the first level of pattern fills, but real automating (“couldn’t someone write an algorithm . . .”) is so complex that it would simply not have been practical for these images. It would require such a high level of human correction that the hours demanded would far exceed those in the mostly manual process we did use. Data from the manual process has, however, been retained, so that it can be used as training data for machine-controlled actions in the future.39 Various factors argued against a fully automated process:

Each page has different levels of show-through, and even on a single page some areas are worse than others; some pages also have partial or complete tissue overlay.

The color of both surface ink and show-through is not consistent from page to page, nor even across a single page.

There are places where the show-through is so difficult to separate from the surface ink that only very detailed close study can determine it.

Superimposing a flipped image of the reverse of a page is usually not helpful, as the reverse is as badly damaged as the front; lining the two up accurately and using one to cancel out the other is as difficult as separating the show-through and surface writing on a single face. The time required is not worth the effort. Restorers did use back-to-back images to help in working out what is show-through and what is not, and images of facing pages to check for offset, but most reported that they could work out what they needed more usefully by zooming in.

Whatever process was involved, human intervention was necessary to, for example, define the selected colors and degree of fuzziness—in fact this is the process employed in pattern filling, which is scripted with stopping points for human-mediated decisions where necessary.

Finally, any automated process is likely to generate errors, and in exactly the same way as correcting OCR (Optical Character Recognition) on an irregular typeface, it is likely that finding and repairing those errors would be as time-consuming as doing the task manually in the first place, although we did this checking even with human editing.40

One final note: an imperfectly cloned image may look lumpy on screen, but when printed out at actual size, nearly all of that fuzziness or lumpiness disappears. Only aiming for print output would have considerably eased our task, but with such a high-intervention activity it was essential that end users be able to compare our reconstruction with the original images, ideally by being able to switch the reconstruction on and off when superimposed on the master image,41 hence the dissemination of both original and reconstructed images also in the virtual medium. The print output would otherwise be extremely difficult to defend. Unfortunately, what looks acceptable at print size does not usually look acceptable in a zoomable environment where fine detail can be examined very closely. This means that we have been more critical of our work than we would have needed to be for a print-only output.

Quite apart from returning a “lost” set of partbooks to usability, the quality of the images and intimate involvement with the inks, scripts, and scribes necessitated by the reconstruction process allowed us to look more closely at factors, such as scribal practice, that could not be seen on the original manuscripts.

Most valuable, I suspect, in this collaborative process was the involvement of a very wide constituency of users who became invested in the success of the project as active and meaningful contributors to the research outcome. Most of the volunteers would otherwise never have had contact with these sources, either physically or virtually. The work of libraries in curating these often-difficult sources is largely unnoticed by the public, even when they are digitized, but interaction with the Tudor Partbooks project has introduced a new public to both the plight of early manuscripts and the value of digitization in their protection and the dissemination of the information they contain. Giving volunteers a stake in the research process also created a relationship between scholars and end users of the music that usually does not exist. Volunteers commented:

“Working on the digital restoration makes me acutely aware of how fragile our grasp on the historical past really is.”

“It’s new, interesting, and challenging, and I’m enjoying the opportunity to be involved in something I would not otherwise have encountered.”

“What I like best about the reconstruction process is assisting in rescuing an old and beautiful artefact from damage. It is akin to cleaning and restoring a painting or cleaning an old building, and requires sympathy and the same level of care.”

“I work in graphic design but have had a life-long interest in the Tudors and their music, so the project to restore the Sadler partbooks is a perfect marriage of all those skills and interests. There is enormous satisfaction in seeing a representation of how the original partbooks must have looked gradually emerging from these damaged pages.”42

The introduction of the volunteer workforce to this project had clear benefits for public understanding of research, but it equally benefited the academic team, for whom the exercise of refining our reconstruction process to encompass the volunteer workforce improved the output, while the work of the volunteers progressed the project considerably.

What, then, does the final result represent? Could it be said to depict the manuscript at an earlier point in its life? Is this how it would have looked today if the scribes had used less acidic ink? The answer is of course that we cannot know. Any natural disparity between leaves that exist now, as a result of the ink degradation, is lost and no longer evident to the eye,43 although differences in ink color that might indicate variations in recipe or the work of different scribes are visible. Grubbiness on pages from handling or exposure was retained by the use of an area of the page being edited as the basis for the pattern fill, and the edges of the pages were left as they were, so finger marks and other handling evidence are still visible.44

I have asserted elsewhere that in an ideal world, digitally edited images should always be reproduced alongside the unedited form,45 both so that the end user is in no doubt that the edited image is edited, but also so that they can see the original—i.e., the master RGB image before editing—for themselves, allowing them to reach their own conclusion about content. Since reproducing both “before” and “after” versions in print for the Sadler books was far too costly, only the reconstructed images will be published in print, but a range of unedited images will also be reproduced in appendices to each partbook.46 The introductory study will ensure that readers are aware that the unedited images can be accessed online so that anyone questioning the appearance of a reconstructed page can check it against a zoomable original.

The Sadler Partbooks project has demonstrated that a project of this type and magnitude is both possible and useful: there was a prevailing belief that the damage was too advanced and extensive for much of the text to be retrieved, and certainly at first sight of the RGB images this seemed to be so. We were fortunate to have the 1920s photostats, which were extremely useful in deciphering some of the worst-affected areas of pages, but even without these, the quality of the high-resolution imaging was sufficient to render almost all the notes readable with a high level of confidence. The few infrared images indicated the value of this additional imaging tool: had we been able, we might have wished to have infrared images of all the leaves, as it might have speeded up some of the more difficult readings, but the limitations on handling the manuscripts made this impossible. The Keeper of Manuscripts only allowed us to try infrared imaging on the condition that it was for a very limited number of leaves, as the RGB imaging done by the Bodleian studio had been intended to be the only handling of the leaves for the foreseeable future. Given that we had been able to read almost all of the music without infrared imaging, there was little justification for pressing for more extensive infrared work.

Looking at this work, the prospects for other primary sources damaged in this way are encouraging: certainly the need for high-resolution imaging is manifest, and it should be undertaken sooner rather than later. Reconstruction at this level is, however, immensely time-consuming, and requires editors with a particularly meticulous (if not obsessive-compulsive) mind-set and highly ethical critical approach.

A collection of ink recipes is to be found in David N. Carvalho, Forty Centuries of Ink (New York: Banks, 1904. Reprinted 2007). A list of early modern recipes from Anon., A Booke of Secrets: Shewing diuers waies to make and prepare all sorts of Inke, and Colours. (London: Adam Islip, 1596) is partly transcribed and available online at “Early Modern Ink Recipes,” Early Handwriting 1500-1700 (online course), accessed October 28, 2017, https://www.english.cam.ac.uk/ceres/ehoc/intro/inkrecipes.html. The following recipe, which appeared in a printed writing tutor, seems to be fairly representative of early modern inks:

To make common Inke of wine take a quart

Two ounces of Gumme let that be a part,

Fiue ounces of Gals, of Copres take three,

Long standing doth make it the better to be.

If wine ye do want, raine water is best,

And then as much stuffe as aboue at the least.

If Inke be too thicke, put vineger in:

For water doth make the colour more dim.

“Rules Made by E. B. for Children to write by,” in E. B., A New Booke, Containing All Sorts of Hands Usually Written at This Day in Christendome (London: Richard Field, 1571). [↩]

From the University of Chicago Library’s Conservation Department: “Under Covers: The Art and Science of Book Conservation,” accessed October 28, 2017, https://www.lib.uchicago.edu/e/webexhibits/scienceofconservation/irongallink.html: “Iron gall ink (IGI) deterioration is one of the most troublesome problems in the conservation of cultural heritage. IGI is produced by the reaction of tannic acid with an iron salt, such as ferrous sulfate (FeSO₄). The solution is initially colorless, but when it is applied to paper and exposed to air, it oxidizes, forming ferric tannate, and turns brown. Unfortunately, the excess iron sulfate, or Fe(II), in iron gall ink can cause severe degradation in the paper or parchment support beneath it by accelerating the oxidative breakdown of the surface through Fenton’s reaction. IGI was in wide use from the 9th century until the 19th century, and there are innumerable objects that display the signs of iron gall ink deterioration.” [↩]

Oxford, Bodleian Library Mus. Sch. e.376–81, a set of six books of (in total) 1241 music pages; and Vatican, Biblioteca Apostolica Vaticana, Cappella Sistina 14 (1472–81) come to mind, particularly among the music sources. Some of the outer leaves of one of the smallest manuscripts of the Gospels, the Michigan, Ann Arbor Codex 2364 (or shelfmark MS 182), dating from c. 1300, are barely readable thanks to burn-through and lacing. The number of manuscripts affected by acid ink increases from the time when paper became more affordable, with a corresponding increase in amateur scribes mixing inks from materials easily to hand. [↩]

An example of deleterious use of gauze is the early sixteenth-century manuscript Florence, Biblioteca Nazionale, Panciatichi 26. I am grateful to one of the reviewers of this article for directing me to this manuscript. [↩]

Tudor Partbooks: The Manuscript Legacies of John Sadler, John Baldwin and their Antecedents (www.tudorpartbooks.ac.uk) in collaboration with DIAMM (the Digital Image Archive of Medieval Music), funded by the Arts and Humanities Research Council (UK). [↩]

Formerly Civico Museo Bibliografico Musicale; earlier Biblioteca del Liceo Musicale. [↩]

Margaret Bent, Bologna Q15: The Making and Remaking of a Musical Manuscript, Introductory Study and Facsimile Edition, 2 vols. (Lucca: Lim Editrice, 2008). Awarded the American Musicological Society’s Claude V. Palisca Award for 2009. [↩]

Each image included an industry-standard color scale, and color profiling was carefully managed from the point of photography through to the press output. [↩]

All image processing work undertaken by DIAMM uses Adobe Photoshop. Color profiles are embedded in the image, and color scales are used in all images taken by DIAMM to ensure correct reproduction. A new version of the image is saved in .psd format (Photoshop’s proprietary format), with the editing work in layers allowing the processes to be examined, refined, deleted, or repeated. [↩]

At the time we did not have permission to put the full resolution images on the DIAMM website, but they are available now, fully zoomable. The images include the color scale so that color shifts caused by screen color-cast can be assessed. The images are at I-Bc Q.15, DIAMM, accessed October 28, 2017, https://www.diamm.ac.uk/sources/117/#/images. The user must be logged in (registration is free) to use the image viewer. [↩]

Julia Craig-McFeely, “From Perfect to Preposterous: How Digital Restoration Can Both Help and Hinder Our Reading of Damaged Sources,” in Cantus Scriptus: Technologies of Medieval Song. Proceedings of the 3rd Annual Lawrence J. Schoenberg Symposium on Manuscript Studies in the Digital Age, ed. Lynn Ransom and Emma Dillon (Piscataway: Gorgias Press, 2012): 125–41; Julia Craig-McFeely, “Finding What You Need, and Knowing What You Can Find: Digital Tools for Palaeographers in Musicology and Beyond,” in Kodikologie und Paläographie im digitalen Zeitalter 2 – Codicology and Palaeography in the Digital Age 2, ed. Franz Fischer et al. (Norderstedt: Books on Demand, 2010): 307–39. [↩]

The PhaseOne PowerPhase FX gave us images of the MS at 845 dpi at real size (the pages were 23.5 x 29 cm), yielding 8-bit RGB files of around 220 MB. [↩]

A number of terms are used to describe this type of damage: “show-through” (in which the writing on the back of the page is visible in a paler color); “bleed-through” (in which the ink starts to bleed through the paper and is difficult to separate in color from the surface ink); “burn-through” (in which the paper appears burned by the ink and may disintegrate altogether). “Lacing” describes the effect of holes burned through between narrow pieces of surviving paper or parchment. There is overlap between show-through and bleed-through depending on perception, and in this paper show-through is used to encompass both terms. [↩]

Most particularly the imposition of bar lines, which can artificially force stresses where a contemporary performer would not have placed one, or impose a duple structure on note groupings that would originally have been seen (without the bar lines) as triple. See Margaret Bent, “Early Music Editing, Forty Years On: Principles, Techniques, and Future Directions,” in Early Music Editing: Principles, Historiography, Future Directions, ed. Theodor Dumitrescu, Karl Kügle, and Marnix van Berchum (Turnhout: Brepols, 2013): 241–71. [↩]

Work has already begun on Oxford, Bodleian Library Mus. Sch. e.376–81. [↩]

There are eighteen surviving “sets,” only nine of which are intact. Of the remaining nine sets, seven are lacking one book out of four or five, and two sets lack three out of five. [↩]

The following nine partbook sets are missing one or more books: Oxford, Christ Church Mus. 979–83, known as the “Baldwin” partbooks (1580; 5 out of 6 surviving); Cambridge, St John’s College K.31 and Cambridge University Library Dd.13.27, “UJ” partbooks (1530; 2 of 5 surviving); London, British Library Roy App 74–76, “Lumley” partbooks (1550; 3 of 4 surviving); Oxford, Bodleian Library Tenbury 1486 and Berkeley private collection motets “Willmott/Braikenridge” partbooks (1591; 2 of 5 surviving); Oxford, Bodleian Library Mus. Sch. e.420–22, “Wanley” partbooks (1549; 3 of 4 surviving); Oxford, Bodleian Library Tenbury 389 and McGhie private collection partbook (1590; 2 of 5 [estimated] surviving); Cambridge, Peterhouse 471–74, “Henrician” partbooks (1539–41; 4 of 5 surviving). Later sets include the two Peterhouse Caroline sets that were considerably more incomplete until a recent discovery of a pile of manuscripts behind some sealed paneling: “Former Caroline Set” MS 47 (1625–40; 7 of 10 surviving); “Latter Caroline Set” MS 42 (1625–40; 7 of 8 surviving). [↩]

As well as incomplete sets of books, twenty-three orphan books survive from the Tudor period, some only as a few fragmentary leaves from bindings, many of which are probably witnesses of a larger corpus of lost complete partbook sets, although some were evidently never part of a larger set. [↩]

These are two dates written in the books. The first study of the books was David G. Mateer, “John Sadler and Oxford, Bodleian MSS Mus. e. 1–5,” Music and Letters 60 (1979): 281–95. Sadler has also been credited with writing a second set of partbooks, of which only two books survive, the Willmott and Braikenridge partbooks (see note 18 for shelfmarks). The matter of scribes is examined in detail in Julia Craig-McFeely, James Burke, and Matthias Range, The Sadler Partbooks: A Collaborative Reconstruction (Oxford: DIAMM Publications, forthcoming 2018). [↩]

Their history is detailed in James Burke, “John Sadler and the ‘Sadler’ Partbooks (GB-Ob MSS Mus.e. 1–5)” (DPhil diss., University of Oxford, 2016). [↩]

Professor Warwick Edwards attempted unsuccessfully to see them in the early 1960s, as an undergraduate. [↩]

The exact date of the conservation work is unknown. At the time, detailed records of conservation work were not kept by the library, and the identity of the conservator is not certain. [↩]

The author, Katherine Butler, and Laurie Clifford-Frith. [↩]

Paper publication is still preferred by performers, and particularly in the case of partbooks, onscreen real estate is still a barrier to studying partbook repertories with ease. See Julia Craig-McFeely, “Digital Man and the Desire for Physical Objects,” Early Music, 40th Anniversary Issue, 41, no. 1 (February 2013): 131–33, https://doi.org/10.1093/em/cas147. [↩]

“Restoring John Sadler’s Partbooks,” Tudor Partbooks, http://www.tudorpartbooks.ac.uk/getinvolved/restoringjohnsadlerspartbooks/; Katherine Butler and Laurie Clifford-Frith, “Digital Restoration of John Sadler’s Music Partbooks: Introductory and Training Videos,” Tudor Partbooks YouTube Channel, accessed October 30, 2017, https://www.youtube.com/playlist?list=PLVIQO75esTNYJTEW180Kj7LtmEBN8hMEt (these continue to be updated from time to time with revised versions of the original videos, and films showing new or more advanced techniques for experienced Photoshop users). These are currently being used, along with images from the DIAMM collection, by the multimedia laboratory of the Department of Cultural Heritage, University of Bologna (www.framelab.unibo.it) for their course, Image Processing and Virtual Restoration, part of the Library and Archive Science Degree. [↩]

An excellent discussion of reconstruction is to be found in M. R. Rambaran-Olm, “The Advantages and Disadvantages of Digital Reconstruction and Anglo-Saxon Manuscripts,” Digital Medievalist 9 (2015), accessed October 30, 2017, http://doi.org/10.16995/dm.49, with an extensive bibliography encompassing arguments about terminology and policy. [↩]

London, Senate House Library, Tudor Church Music Box 48. We are immensely grateful to the department of Special Collections in Senate House Library and their administrator, Charles Harrowell, who have preserved these photostats and kindly allowed me to visit them with a high-resolution scanner to make scans for the reconstruction project. They have also given permission for the scans of the photostats to be made available online alongside the original and reconstructed images, at diamm.ac.uk. Information about the TCM collection may be seen here: “Tudor Church Music Collection,” Senate House Library, University of London, accessed November 1, 2017, http://www.senatehouselibrary.ac.uk/our-collections/special-collections/printed-special-collections/tudor-church-music-collection. [↩]

Tudor Partbooks channel, accessed October 30, 2017, https://www.youtube.com/channel/UCmfnjmwu8Bytz98zlcxgpOg. The DIAMM project has used Adobe Photoshop since the early days of the project in 1998, when this software offered tools in advance of those available in competing packages. Much of Photoshop’s functionality is now available in other software, including the free software, GIMP, but we retain the use of Photoshop since the user interface makes the work much faster than with other software, and integration with graphics tablets is optimized with Photoshop (one of our volunteers, an experienced user of GIMP, was defeated by the difficulties of employing the comparable tools). Photoshop also has the advantage of excellent support and updates, so there is seamless integration with operating systems and upgrades between hardware and other software such as graphics-tablet drivers. The training videos available include work on the Sadler books and advanced training videos on another set of books, which show the advanced techniques and variety of tools in use in considerable detail. [↩]

Options were shown online and at numerous talks given by the team with opportunities for members of the public, academics, and performers to choose a preference, but no clear “winner” emerged. It was evident that whatever we did would be unsatisfactory to someone. [↩]

Craig-McFeely, Burke, and Range, The Sadler Partbooks; Craig-McFeely, “From Perfect to Preposterous.” Papers: Julia Craig-McFeely, “Forensic Recovery with Digital Documents” (presented at the Plainsong and Medieval Music Society Study Day, Worcester Cathedral, Worcester, England, May 13, 2017); Julia Craig-McFeely, “Exultation and Despondency: The Digital Reconstruction of the Lost Partbooks of John Sadler (Bodleian Library Mus. e. 1–5)” (presented as part of the Bodleian Library Centre for Digital Humanities “Research Uncovered” series, Bodleian Library, Oxford, England, February 5, 2016); Julia Craig-McFeely, “‘I saw it on CSI . . .’: Forming Digital Technology for Humanities Research” (presented as part of the workshop “Describing, Analysing and Identifying Early Modern Handwriting: Methods and Issues,” organized by the Centre for Early Modern Studies and Merton College History of the Book Group, with the support of the Bodleian Library Centre for the Study of the Book, Merton College, Oxford, England, April 25, 2013). [↩]

There is abundant literature on word underlay (see for instance David Mateer, “John Baldwin and Changing Concepts of Text Underlay,” in English Choral Practice, 1400–1650, ed. John Morehen (Cambridge: Cambridge University Press, 1995): 143–60; or the excellent collection of essays in Don B. Harrán, Word–Tone Relations in Musical Thought: From Antiquity to the Seventeenth Century (Neuhausen-Stuttgart: American Institute of Musicology, 1986)), including the evidence provided by the Sadler books. Vertical alignment is certainly not uncommon, and most of the contemporary partbooks display the same concern for underlay positioning. The Sadler books are unusual in that the scribes rarely utilize repeat marks to indicate text repetition (://:). The music and text were clearly copied concurrently, evidenced by corrections where the scribe has blotted out his error and written over it, with both text and music corrected. [↩]

Edmund Fellowes, William Byrd (London: Oxford University Press, 1948). [↩]

To date, seventeen scribes have been isolated; a full discussion is to be included in the introduction to the published version of the images: Craig-McFeely, Burke, and Range, The Sadler Partbooks. [↩]